A level results day generally brings an air of mild disappointment to mid-August. On average, candidates under-perform their predicted grades by about two and a half points.

And if you look at overall proportions, the problem appears to be more pronounced for more advantaged students: it would appear that those in POLAR3 quintile 5 (the most advantaged) have a greater difference, on average, between their predicted and actual grades than those in POLAR3 quintile 1. The general consensus is that this makes a coherent progressive case for post-qualification admissions (PQA).

What does the data say?

A cursory glance at the graphs should reveal one problem: the predictions and actual results show very similar patterns. First, let’s look at the proportions of actual and predicted grades for students accepting places via UCAS (the underlying data also covers applications, and shows how proportions have changed since 2010). This shows only a small difference between the predicted-actual gaps for P3Q1 and P3Q5.

But if you look at the raw numbers, you see that even this small difference evens out. So, whereas predicted grades are a poor predictor of actual A level grades (if broadly consistent in the magnitude and direction of their error), they are a very accurate predictor of the influence that disadvantage has on A level attainment.

There are slight differences in magnitude, but given the sizes of these groups they do not appear to be significant.

Here it is in tabular form for 2017:

| POLAR3 quintile | Mode of predicted points | Mode of actual points | Difference | Number of acceptances in quintile (predicted) |
|---|---|---|---|---|
| 5 | 15 | 12 | 3 | 53,995 |
| 4 | 14 | 11 | 3 | 37,055 |
| 3 | 13 | 11 | 2 | 28,360 |
| 2 | 13 | 11 | 2 | 20,665 |
| 1 | 12 | 9 | 3 | 13,835 |
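As a rough check on the table, the sketch below recomputes each quintile's predicted-actual gap, each quintile's share of the acceptances listed, an acceptance-weighted average of the gaps, and whether both predicted and actual modes rise with quintile. The figures are copied from the 2017 table; the names (`TABLE_2017`, `summarise`, `rises_with_quintile`) are illustrative only. Note that the table reports a difference of modes, not an average per-student gap, so the weighted figure is indicative at best.

```python
# 2017 figures copied from the table above:
# quintile -> (mode of predicted points, mode of actual points, acceptances)
TABLE_2017 = {
    5: (15, 12, 53_995),
    4: (14, 11, 37_055),
    3: (13, 11, 28_360),
    2: (13, 11, 20_665),
    1: (12, 9, 13_835),
}


def summarise(table):
    """Per-quintile gap and share, weighted average gap, and Q5:Q1 ratio."""
    total = sum(acc for _, _, acc in table.values())
    rows = {
        q: {
            "gap": pred - actual,  # difference of modes, not a mean gap
            "share": acc / total,  # share of the acceptances listed
        }
        for q, (pred, actual, acc) in sorted(table.items())
    }
    # Each quintile's mode gap weighted by its number of acceptances.
    weighted_gap = sum((p - a) * acc for p, a, acc in table.values()) / total
    # Number of Q5 acceptances for every Q1 acceptance.
    q5_to_q1 = table[5][2] / table[1][2]
    return rows, weighted_gap, q5_to_q1


def rises_with_quintile(values_by_quintile):
    """True if values never fall as the quintile number increases."""
    ordered = [values_by_quintile[q] for q in sorted(values_by_quintile)]
    return all(a <= b for a, b in zip(ordered, ordered[1:]))


if __name__ == "__main__":
    rows, weighted_gap, ratio = summarise(TABLE_2017)
    for q, r in rows.items():
        print(f"Q{q}: gap {r['gap']} points, {r['share']:.1%} of acceptances")
    print(f"Acceptance-weighted mode gap: {weighted_gap:.2f} points")
    print(f"Q5 acceptances per Q1 acceptance: {ratio:.1f}")
    print("Predicted modes rise with quintile:",
          rises_with_quintile({q: v[0] for q, v in TABLE_2017.items()}))
    print("Actual modes rise with quintile:",
          rises_with_quintile({q: v[1] for q, v in TABLE_2017.items()}))
```

On these figures the gaps sit at two or three points in every quintile, both sets of modes rise with quintile, and there are roughly four Q5 acceptances for every Q1 acceptance.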

When predictions are too accurate

I’ve included a column for the overall number of students as this leads us into our other issue – do fewer students from POLAR3Q1 backgrounds apply to university because their grades are, on average, worse than those of their POLAR3Q5 peers?

With significantly fewer P3Q1 students applying to university, you might expect those who do apply to skew towards the higher end of the points spectrum. The fact that they do not suggests that ambition (or “grit”, if you prefer) is not the problem. Given that the share of the young population skews towards wards with lower participation (see figure 3 here), we are faced with the uncomfortable truth that P3Q1 students just don’t tend to do as well at A levels.

You get into some shady territory here – so let’s be clear that nothing suggests a lack of innate ability is at the root of this. It’s far more likely that A levels are (unconsciously) designed to suit the kinds of people who traditionally do well at A levels. But if you’re not going to do as well at A levels, you are less likely to apply to university, or to a highly selective university.

Because the difference between predicted and actual grades is fairly constant across all POLAR3 quintiles, we can’t blame over-prediction among more advantaged quintiles for their higher participation rates at such universities. Everyone is over-predicted by around two and a half points, and it would seem that this over-prediction is priced in: institutions accept lower points than they otherwise ask for, whether from candidates they have already made a (higher) offer to or from those entering through clearing.

Gill Wyness has examined these and related phenomena for the Sutton Trust. She was evidently granted access to individual student records, which let her match each student’s prediction to their performance, and has thus performed a much more thorough analysis than my introduction here. She finds that a very small number of high-performing students from Q1 may be disadvantaged by lower predictions. Looking at Q1 students with grades above AAB in 2017, 2,880 achieved such grades, compared with 5,380 who were predicted them.
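Why matched records matter can be shown with a toy example. The sketch below uses six invented (predicted, actual) points pairs, not real data, and assumes a scale on which AAB is worth 14 points (A = 5, B = 4); both the cohort and the threshold are hypothetical and are not taken from Wyness’s analysis. In aggregate, fewer students reach the threshold than were predicted to, and on average everyone is over-predicted, yet matching each prediction to the same student’s result still turns up one under-predicted high achiever, a case that aggregate counts alone cannot reveal.

```python
from dataclasses import dataclass

# Hypothetical threshold: AAB on an assumed scale where A = 5 and B = 4.
AAB_POINTS = 14


@dataclass
class Student:
    predicted: int  # predicted points (invented toy value)
    actual: int     # achieved points (invented toy value)


# Invented toy cohort, NOT real data.
COHORT = [
    Student(15, 12),
    Student(15, 15),
    Student(14, 11),
    Student(12, 14),  # predicted below the threshold, achieved it
    Student(11, 9),
    Student(14, 14),
]


def aggregate_counts(cohort, threshold=AAB_POINTS):
    """Counts of the kind visible in published aggregate tables."""
    predicted = sum(s.predicted >= threshold for s in cohort)
    achieved = sum(s.actual >= threshold for s in cohort)
    return predicted, achieved


def under_predicted_high_achievers(cohort, threshold=AAB_POINTS):
    """Students who reached the threshold despite a prediction below it.

    Only computable when each prediction is matched to the same student's result.
    """
    return [s for s in cohort if s.predicted < threshold <= s.actual]


def mean_gap(cohort):
    """Mean of (predicted - actual); positive means over-prediction."""
    return sum(s.predicted - s.actual for s in cohort) / len(cohort)


if __name__ == "__main__":
    predicted, achieved = aggregate_counts(COHORT)
    print(f"Predicted at or above threshold: {predicted}, achieved: {achieved}")
    print(f"Mean over-prediction: {mean_gap(COHORT):.1f} points")
    print(f"Under-predicted high achievers: "
          f"{len(under_predicted_high_achievers(COHORT))}")
```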

But what if the problem wasn’t the predictions?

So what is an A level predicted grade? That’s easy – a prediction of the grade someone will get in their A level, you may say. But in practice the prediction contains a lot more information about a prospective student than the actual grade.

Though A level grades are a defined and measurable quantum of information, what they signify is a little more slippery. A level grades measure performance in A level assessments, which is generally seen as a usable proxy for a person’s aptitude for higher study (in terms of existing accomplishments, capacity for learning, and commitment to hard intellectual work). In recent years this distinction has been eroded, with the signifier collapsing into what is signified.

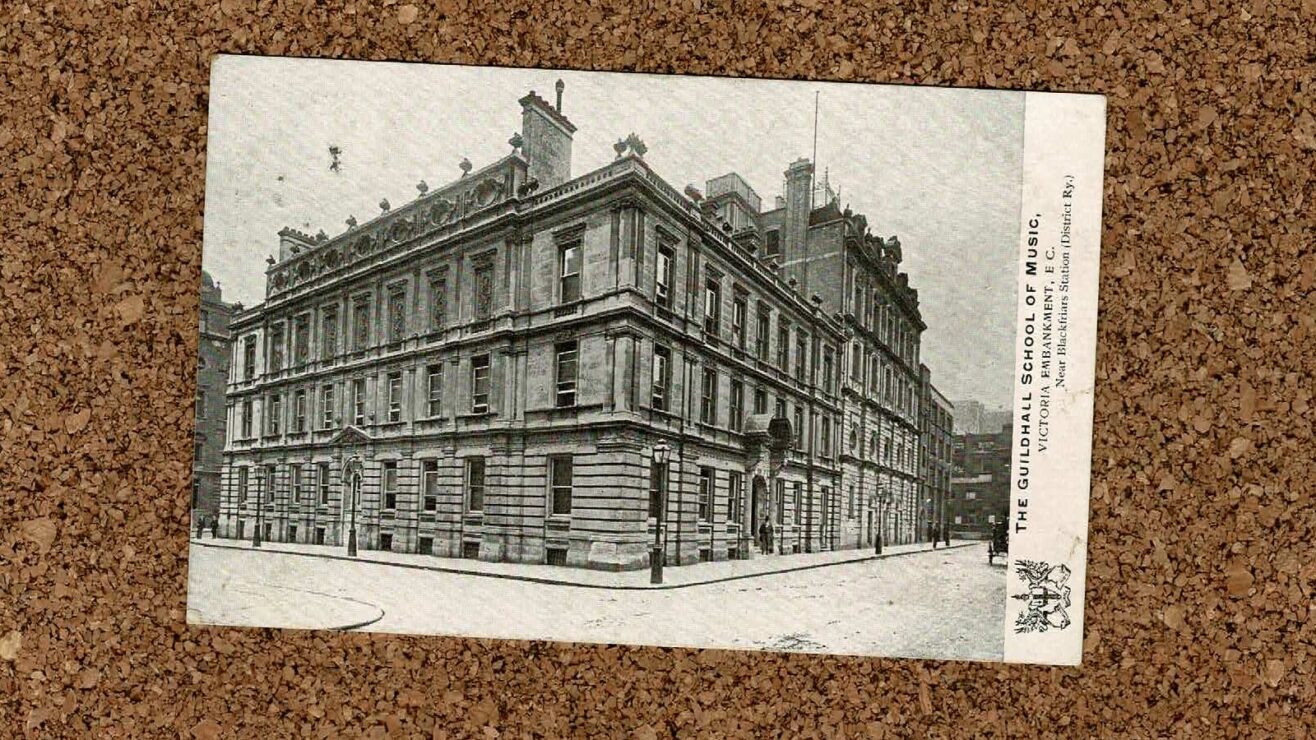

Institutions also use a wide range of other information to choose who to make an offer to. For some subjects other tests may be more appropriate – performance at interview, technical or vocational qualifications, wider life experience, a portfolio of work, an audition… even the dreaded UCAS statement. From music colleges to Cambridge colleges, a person (not a bundle of A level results) is offered a place – and, of course, a person will choose whether or not to accept.

So our “gold standard” qualification could be seen as a poor proxy for the predicted grade, which measures the opinions of those who best understand the intellectual and creative capacity of a young person they know well. And the predicted grade is itself only one of several quanta and qualia used to decide whether to make an offer to a given individual at a given time.

Time to leave the gold standard?

It is sad, but symptomatic of a wider societal issue, that both grades and predictions are related to an individual’s background and history. The argument for moving to PQA is that by removing the more subjective element (the predicted grade) we remove this effect. But the data doesn’t back this up, because A level grades also have a bias against those from backgrounds with less experience of HE. They are not a pure measure of academic potential unless you happen to believe that such potential is unequally distributed across POLAR3 quintiles.

Perhaps we need to leave the gold standard and ask whether A levels (predicted or actual) are really the best yardstick for institutional admissions. And perhaps the rise in unconditional offers is the best thing to have happened to widening access in years.

Has there been some confusion of the Polar 3 quintiles in the second paragraph of the quote below? Surely fewer P3Q1 students apply and it is P3Q1 students who tend to do less well?

“I’ve included a column for the overall number of students as this leads us in to our other issue – do less students from POLAR3Q1 backgrounds apply to university because their grades are, on average, worse than their POLAR3Q5 peers?

With significantly less P3Q5 applicants applying to university, you would think that you would see there a general skew towards the higher end of the points spectrum. The fact that there is not suggests ambition (or “grit”, if you prefer) is not the problem. As the share of the young population skews towards wards with lower participation (see figure 3 here) we are faced with the uncomfortable truth that P3Q5 students just don’t tend do as well at A levels.”

You are right. Something clearly got confused there. I’ve fixed it now – thank you!