We’ve been talking quite a bit about community and belonging here at Wonkhe towers over the past few years.

From our Only the Lonely research with Cibyl back in 2019, through work during the pandemic in 2020, to the Four Foundations of Belonging that we worked on with Pearson last year, our subscriber students’ unions have been helping us to research the way in which feelings of connectedness and community relate to student mental health, confidence and student success.

But I wasn’t prepared for this.

Not another survey

Over the past few months, we’ve been working with our partners at Cibyl and a group of “pioneer” SUs on a new kind of monthly student survey called Belong, which we’re launching formally at this year’s Secret Life of Students. It’s designed to help SUs and their universities not just know students’ opinions, but understand and learn from their lives too.

We’re on a mission to improve and enhance student representation across the UK.

As part of that, we’ve been gathering both quantitative and qualitative feedback on everything from teaching quality to assessment and feedback, and from feelings of belonging to the burdens students face when trying to succeed in the teeth of a cost of living crisis.

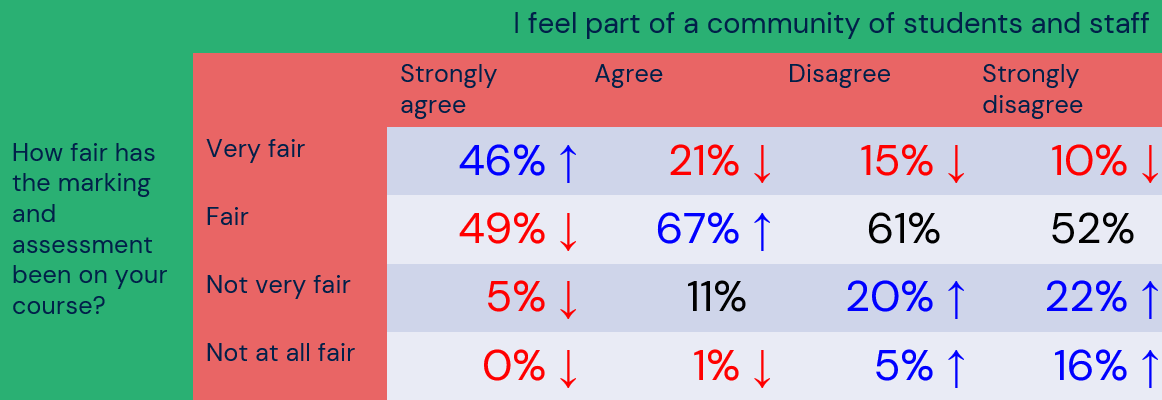

On the quan, there I was a few weekends ago, working up some crosstabs for slides based on the sorts of things I figured would be interesting – differences by characteristic, or the links between community and mental health, and I accidentally slipped and got the software to display me this:

It was fascinating for me for a couple of reasons. We’ve been developing our understanding of community and belonging for some time, and have bemoaned the casual way in which the Office for Students abandoned the question on community in the new version of the National Student Survey.

We knew about the link between feelings of community and confidence about outcomes, we knew about its relationship with wellbeing, and we knew about the way in which students’ background, characteristics and activities contributed to it.

But we hadn’t really ever imagined that there might be a significant link between belonging and perceptions of things like assessment fairness. It just wasn’t a hypothesis I’d ever considered. But I should have.

Our Achilles heel

A number of years ago now, I worked as CEO of the students’ union at UEA. One year the university had made significant progress on the sector’s traditional NSS Achilles heel, assessment and feedback, with gains on timeliness (NSS Q10) and usefulness (NSS Q11), but not so much on fairness (NSS Q9).

It had long been a frustration of mine that OfS (and HEFCE before it) had never published a proper national analysis of the free-text comments accompanying the NSS, robbing us of intel on why students score the way they do. So the following December, assessment fairness was the topic of the week for one of the SU’s weekly “get out and talk to students” surveys, which all SU staff had to spend an hour gathering responses to.

The other day I dug out the “flash results” slides that we used to punt out around the university on a Friday afternoon, and we’d concluded from the qual conversations that there were three big explanations:

- A lot of it linked back to the criteria being clear in advance, and for many students “unfairness” was about a sense that the application of those criteria wasn’t clear or consistent

- Some of the discussion was about the consistency of assessment design across the university

- But the number one reason we identified was about consistency between markers and courses

What wasn’t on the slide was “I feel part of a community of staff and students”.

So, back in the present, I checked the data and made sure I’d applied the right weightings. I looked at the source data to check that I’d not somehow renamed a column before uploading. I checked that the sample we were using was nationally representative, and yes, we’d not overweighted the responses from the pioneer SUs. So what was going on?

Qual assurance

In the design of our new research project, which will give subscriber SUs access to a free monthly survey product with benchmarking over time and against students from across the UK, we’ve been determined to gather almost as many qualitative responses as quantitative ones.

And when I returned to the desktop to slice out the free text on assessment fairness, as well as the familiar themes above, the AI suggested that two more were lurking in the words.

One was a belief that markers (or indeed those designing assessments) hadn’t taken students’ circumstances into account properly or fairly.

So one student said that having to take the first half of their course online due to visa issues had left a huge gap in their experience compared with the rest of their class, rendering their assessment unfair.

Another said that marks “often feel like a score for participation” which they said was unfair because “not all of us are able to be there all the time that some can”.

And another said that their assignment deadlines had fallen at a time when their regular source of childcare had let them down, and they assumed they wouldn’t get an extension.

The overwhelming sense from many of the comments was that, whether through inflexibility or a lack of understanding, they’d been unable to perform to the best of their ability, and their university hadn’t taken that into account.

And the other aspect in the qual? Community.

One student, asked why they’d responded as they had on assessment fairness, simply said “I’m not one of the favourites.”

Another said that they didn’t have anyone to ask questions of – they’d not made connections on the course and their tutors seemed too busy to ask.

And another just said “[The ones that do better] well they have personal connections, I don’t”.

In response after response, it was clear that students who described isolation were unsure of what was expected, unclear on how to meet those expectations, and left to ruminate on poor marks with no way of working with others to build the confidence to go again.

It turns out that feeling part of a community of staff and students is essential to feelings of fairness in assessment design, in marking and in learning from the feedback given.

And the worst part? I’d missed it.

What do you need them for

Up in the attic, I still have boxes and boxes of stuff from previous jobs – and one of the boxes had in it folders full of anonymised survey responses from that “Quality Conversations” project at UEA all those years ago.

Plenty of the forms reflected those three bullets from the slides I’d found. But there, hiding in plain sight, were plenty of other comments too: comments remarkably similar to the qual I was staring at on the screen, which we ought to have spotted at the time.

My guess? I wasn’t looking for them.

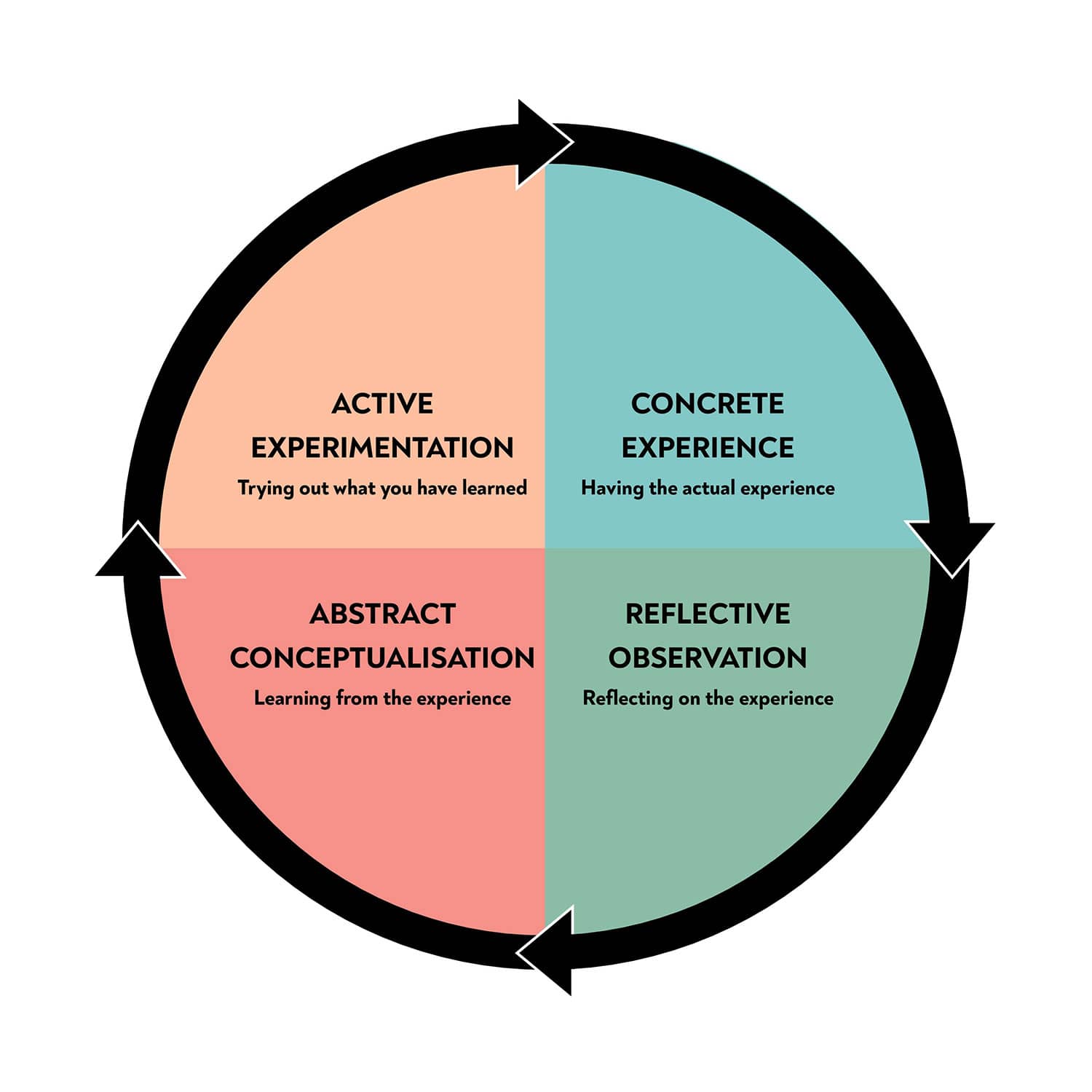

When I’m explaining Kolb’s learning cycle to student leaders over the summer, I often lament that students’ unions and universities don’t seem very good at learning.

There’s plenty of “concrete experience” on the hamster wheel of the academic year, but when the survey results appear and the reports are created, the “reflective observation” feels rushed, the “abstract conceptualization” feels thin, and the yearning for “what other universities do” as a replacement for “active experimentation” with our own approaches is a real buzzkill.

It doesn’t have to be this way. When students are engaged in survey design, they often ask about things that others would never have thought of. When student representatives help out with analysis, we get hypotheses that reflect reality rather than the rose-tinted view of those who often write the questions.

When students are treated as lab rats, we are destined to make the same mistakes over and over again. When we treat them as partners, we can learn a lot from them.

So in our survey product Belong, which SUs can sign up to from today, we’ll be putting students in the driving seat, asking them to help choose our monthly focus themes, develop lines of inquiry, help us build theories and drive ideas for policy change.

Developing bogus, simplistic snapshots of what “students” are like is off the table. Understanding why students score, attain and feel the way they do is very much on it.

We can’t wait to share more insights from it soon, and our promise to anyone interested in taking part is simple. We’ll never make the same mistake that I did all those years ago at UEA. We’ll learn.

You can find out more about Belong in our launch brochure here.

Your question “How fair has the marking and assessment been on your course?” applies to school students sitting GCSE, AS and A level exams too, especially when linked to the question “Do you know that school exam grades are only reliable to one grade either way?”

The assertion that school exam grades are “reliable to one grade either way” is well-evidenced, for those are the exact words used by Dame Glenys Stacey, the then Chief Regulator of Ofqual, in her evidence at a hearing of the Education Select Committee held on 2 September 2020 in the aftermath of the ‘mutant algorithm’ fiasco (see Q1059, https://committees.parliament.uk/oralevidence/790/pdf/).

The BIG PROBLEM, of course, is that there is only one grade on the certificate.

And it is on that single grade that the student is judged.

That single grade, though, is only “reliable to one grade either way”. Is this unreliability factored into the HE admissions process?

https://wonkhe.com/blogs/pqas-underlying-and-false-assumption/

A very good article. High assessment results largely come down to a set of factors.

I just wonder whether universities, as well as employers for that matter, ought to know that exam grades are “reliable to one grade either way”. Perhaps Ofqual should publish this in big capital letters on each exam certificate, since they themselves have acknowledged this fact?

Assessment at university needs to be fairer, but what if the ‘right’ people, grades-wise, aren’t there because of a faulty exam system that Ofqual seem extremely reluctant to change (despite the numerous times it’s been flagged!)?