The robustness of published scientific research is in the news again. A systematic attempt to replicate the findings of 21 experimental social science studies published in the prestigious journals Nature and Science found that only 62% (13 of 21) replicated, and that the effects observed in the replications were systematically smaller than those originally reported.

This mirrors the results of similar projects in fields ranging from psychology to cancer biology, and broadly supports the claim, made over ten years ago by John Ioannidis, that most published research findings are false. Indeed, concerns about the quality of published research are such that the House of Commons Science and Technology Committee recently held an inquiry into research integrity.

No rewards for correct answers?

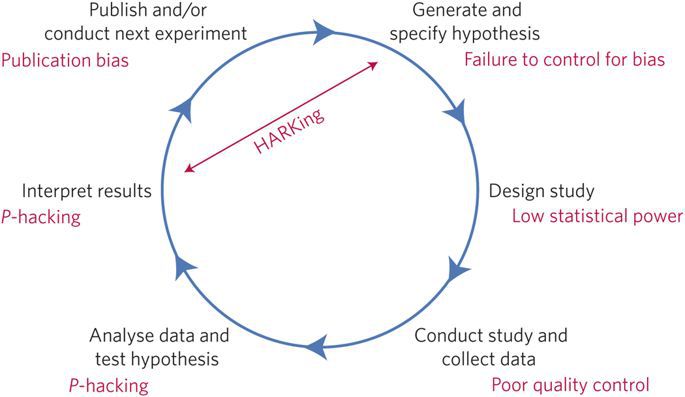

Various explanations have been offered for why published research might be less robust than we would hope: small sample sizes, herd behaviour around “hot” topics, lack of statistical training and so on. But these are all proximal causes. What are the deeper issues that give rise to these problems? Could the structure of academia, and in particular current incentives, unconsciously shape the behaviour of scientists in ways that are good for their careers but not ultimately good for science? After all, while nearly all of us go into science wanting to find out something new, interesting and, hopefully, important, in our day-to-day work we’re not actually incentivised to get the right answer.

At the moment we’re rewarded for publishing, getting grants, even getting our research into the news. All of these things lead to promotion, prestige and invitations to speak at conferences in nice places. But when are we rewarded for being right? There are a few major prizes (most obviously, the Nobel Prizes), but the vast majority of current incentive structures focus on proxies such as publication. Various attempts to model the scientific ecosystem support this view: current incentives lead to lower-quality research. Worse still, bad science may actually confer a competitive advantage, in which case those ways of working will proliferate, resulting in the “natural selection” of bad science.

Manifesto pledges

What can we do? A group of UK researchers recently published a Manifesto for Reproducible Science, in which we argue for the adoption of a range of measures to optimize key elements of the scientific process: methods, reporting and dissemination, reproducibility, evaluation and incentives. Crucially, most of the measures we propose require the engagement of funders, journals and institutions. However, while many funders and journals have begun to engage seriously with these issues (increasing the use of reporting checklists and allowing methodology annexes on grants, for example), most institutions have been slow to act. And institutions control the strongest incentives: hiring and promotion.

We identified two broad areas where institutions could foster higher-quality research: methods (e.g., by providing improved methodological training, and by promoting collaboration and team science) and incentives (e.g., by rewarding open science and reproducible research practices). Open science practices, for example, have been argued to improve quality control: transparency makes errors more likely to be identified, so researchers are more likely to quadruple-check their work where previously they might only have triple-checked it! But open science practices are not typically listed as essential or desirable criteria in job descriptions, or rewarded in promotion applications.

Better use of metrics

Fortunately, several initiatives exist that can support universities keen to improve their research quality and culture. The Forum for Responsible Research Metrics promotes the better use of metrics, for example in recruitment and promotion procedures, and in 2018 hosted an event titled “The turning tide: a new culture of research metrics”. This highlighted that more effort is needed to embed the relevant principles in institutions and to foster more sensitive management frameworks. And in mid-September the University of Bristol will host a workshop for key stakeholders (funders, publishers, journals) to discuss forming a network of universities that will coordinate and share training and best practice.

This network will be an academic-led initiative, ensuring not only that efforts to improve research quality are coordinated, but also that they are evaluated (given that well-intentioned efforts may have unintended consequences) and designed with the needs of the academic research community in mind. It will allow the myriad new initiatives across the UK to be brought together, and new ones to be developed. The current focus on the quality of scientific research is best understood not as a crisis but as an exciting opportunity to innovate and modernise. UK science has a well-deserved reputation for being world-leading, but to remain world-leading we will need to embrace this opportunity.

At a time when universities are striving to adapt to a new marketized climate, it is easy to lose sight of why universities exist: to produce knowledge, through teaching and research. Published scientific research will never be perfectly robust; there will always be a need for exploratory research and for a degree of risk, which means that some findings will turn out to be false. But if knowledge is our product, and research one of our product lines, then we need to ensure that this product is of high quality. At the moment, many of our ways of working, and therefore our quality control processes, are rooted in the 19th century and are not up to the job. We need to take this seriously if we are to survive.

The objective here is very laudable. The question is whether this network is really taking its task seriously. One thing that concerns me is that the University of Bristol itself has a unit, the Bristol Randomised Trials Collaboration, that supports trials in the field of adolescent psychology that suffer from some of the worst flaws you discuss (MAGENTA and FITNET, for instance). How come this unit has not been taken to task? And why did Dorothy Bishop come to the defence of the investigator when approached by the Science Media Centre? One has to ask whether this is all window dressing.

Many of the points made in the manifesto are cogent. The pressure of commercialisation is dominant. So maybe this is not an issue of 19th-century ways of working. In my experience, standards were much tighter 30 years ago. I think this may be a new, 21st-century problem. Everybody knew about all these issues long ago; it is just that now people are allowed to get away with ignoring them, by overseeing committees and editors who no longer oversee.