The annual HEA-HEPI Student Academic Experience Survey is one of my favourite events in the higher education policy calendar. This excellent piece of work has put a spotlight on important aspects of the student experience in higher education such as wellbeing, perceptions of value, views on spending priorities and perspectives on teaching quality.

The survey has also become very influential, and was cited extensively in the recent White Paper, particularly as a justification for introducing the Teaching Excellence Framework. Given its role in the higher education debate, and that the narrative it spawns is exceptionally important for public policy discourse, I’ve picked out five areas of particular interest that will frame future discussions.

Universities must both inflate and manage expectations

To those who have followed the student satisfaction debate for many years, particularly in relation to the NSS, it will come as little surprise that the strongest correlation with satisfaction was that ‘experience had matched expectations’. Outside of higher education, happiness has been shown to be as reliant on expectations as on experience, and so it seems to be the case for students. Expectation management is therefore a critical matter for institutions seeking to maximise satisfaction.

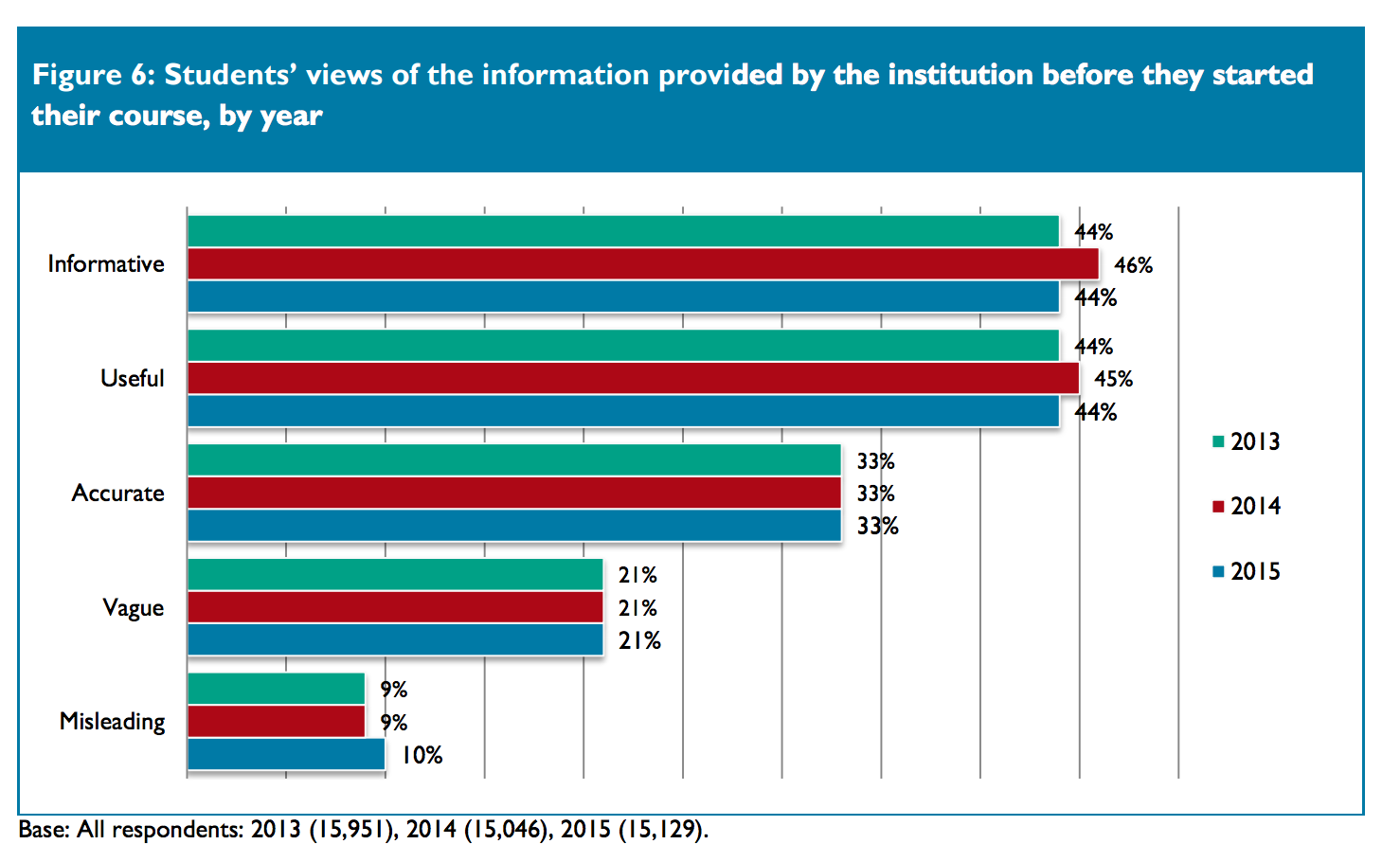

This seems to be a particular challenge in a market environment. Previous iterations of this survey have seen only 33% of students describe information they received prior to their course as accurate. 21% described it as vague, and 10% as misleading. In an increasingly marketised system, institutions are forced to aggressively blow their trumpets with claims about the quality of their academic and student experience in order to get students through the door, but it seems that managing students’ expectations is one of the keys to satisfaction. The government might argue that the continued drive for greater quantities of ‘objective’ public information is the answer, but studies have shown that students are more likely to regret their choices if they ‘maximise’ the amount of information they analyse prior to making a choice.

Source: HEPI-HEA (2015)

Quite how the sector is supposed to square this circle is difficult to answer. The HEA have suggested that it gives institutions the opportunity to promote their “signature brand” of pedagogy, and suggest this year’s survey shows that specialist institutions in particular are effective at doing this.

Contact hours: don’t misunderstand what the problem is

There is often a great deal of unease in the sector about the ‘tyranny of the consumer’, particularly through the blunt instrument of student surveys. This survey’s headline call for more action on contact hours is testament to that, and to my mind much of the sector’s initial reaction has indicated a degree of defensiveness that will do it few favours.

This isn’t to say that universities are wrong in the great contact hours debate. There is very little evidence to suggest that, alone, increasing contact hours improves course quality or student learning. It is clearly more important to consider what happens within those contact hours. From my own anecdotal experience at least, it is very possible to have two different humanities courses with similarly low levels of contact hours, and for the two to vary a great deal in quality and student learning. Graham Gibbs corroborates this:

“The number of class contact hours has very little to do with educational quality, independently of what happens in those hours, what the pedagogical model is, and what the consequences are for the quantity and quality of independent study hours.”

The HEPI-HEA research and many other pieces of student research (or indeed any form of public polling) often see students suggesting solutions to problems that haven’t been identified specifically enough. We might be reminded of the classic scene from Moneyball, where Brad Pitt chastises his team for not being clear about what they want to fix:

Ken Medlock: We’re trying to solve the problem here, Billy.

Brad Pitt: Not like this you’re not. You’re not even looking at the problem.

Medlock: We’re very aware of the problem. I mean…

Pitt: Okay, good. What’s the problem?

Medlock: Look, Billy. We all understand what the problem is. We have to replace…

Pitt: Okay, good. What’s the problem?

Medlock: The problem is we have to replace three key players in our lineup.

Pitt: No. What’s the problem?

When surveys tell us that students think the solutions to a better education are “staff training in how to teach” or “more contact hours”, we may do better to think about the problems that students feel exist rather than their jump towards solutions. On this, I would imagine there is a great deal of alignment between teachers’ aspirations for their students and students’ aspirations for themselves, but quite a communications gap between the two.

| Students say... | Institution response... | What students might mean... | Potential enhancement... |
|---|---|---|---|
| “There weren’t enough contact hours!” | “Students are told at induction that they are expected to undertake extensive independent study.” | “My learning experience was not as immersive as I had hoped. I had little reason to spend much ‘time on task’. I think contact hours are the solution.” | Change assessment patterns to ‘nudge’ students towards greater breadth and quantity of independent study. |
| “There aren’t enough books in the library!” | “We invest extensively in ensuring all core-texts are available in the library” | “When unable to obtain a library book, I was not aware of other potential sources of information” | Integrate research and information location skills into curricula and teaching. |
| “Marking was so unfair and varied so much from lecturer to lecturer!” | “Our standards are assured by external examiners and QAA.” | “I was unaware of the differing assessment criteria for varying assessments” | Publish assessment criteria in accessible language, and relate all feedback directly to these criteria. |

Perhaps students telling us that they want more contact hours or trained teachers is really evidence that students want to be challenged, to have stimulating engagement with their teachers, and to learn and grow. The single most cited reason for expectations not being met was that students felt they did not put in enough effort themselves; this also points to a fundamental alignment of aspirations between students and teachers. That’s not to say that well-trained teachers and a small increase in contact hours won’t be part of the solution in some instances.

However, since the White Paper was released, I’ve witnessed a couple of instances where influential figures in higher education policy have appeared inclined to go on a contact hours ‘crusade’ – often confusing contact hours with ‘time on task’ – when trying to justify the TEF. Incentivising institutions to maximise the number of contact hours for their own sake will only end in an increased number of boring and relatively unengaging lectures, with relatively low learning value and, one anticipates, low attendance.

Do as we do, not as we say

Institutions are at risk of a ‘do as we say, not as we do’ failure if the solution is constantly trumpeted as merely ‘explaining’ to students why independent study is important. Courses need to be structured in a way that incentivises students to understand for themselves that it is important. Course designers could learn a bit from behavioural economics and nudge theory.

The survey results further underline this, with the correlation between effort and perceptions of value, and also the results on feedback. There is no silver bullet to good teaching and good course design, but if there were one it would be ensuring that there is ample opportunity for prompt and frequent feedback on students’ work. I would argue that the report’s conclusion, which calls for “an explanation to students of expectations that are unrealistic” about feedback turnaround times, takes the wrong message from the data: ‘quick and dirty’ feedback has been shown to be better than slow and detailed feedback.

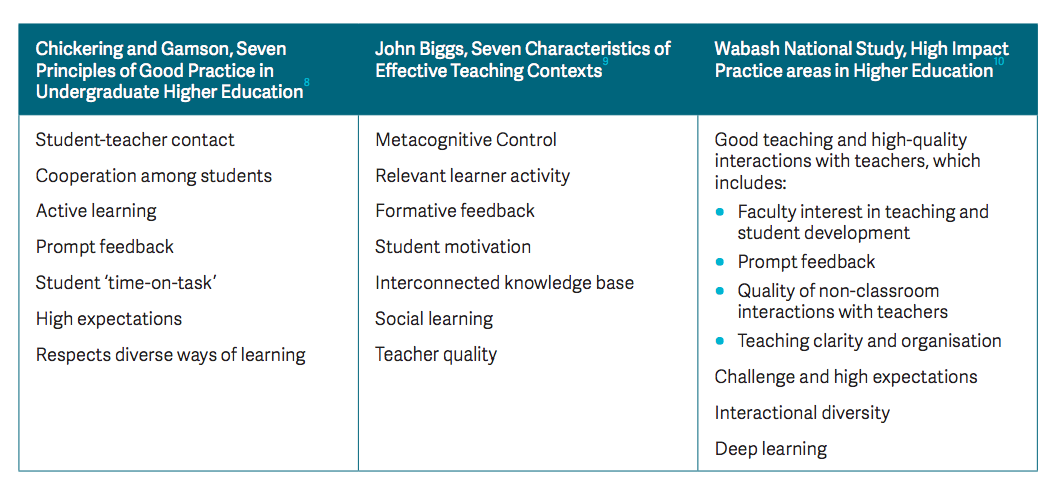

Indeed, the survey’s findings correlate strongly with research into effective student engagement and learning, as well as years of NSS data, emphasising “helpful and supportive” staff, “useful feedback” on assessed work, students being “motivated to do [their] best work”, and staff stimulating student interest. These are the very same “small range of fairly well-understood pedagogical practices that engender student engagement” noted by Gibbs as well as in other models based on extensive research. As an aside, note how similar the three models are:

Source: NUS (2015)

The overarching lesson here is captured by John Biggs’ model of “constructive alignment”; in short, that an effective higher education course is greater than the sum of its parts:

“Teaching and learning take place in a whole system, which embraces classroom, departmental and institutional levels. A poor system is one in which the components are not integrated, and are not tuned to support high-level learning. In such a system, only the ‘academic’ students use higher-order learning processes. In a good system, all aspects of teaching and assessment are tuned to support high level learning, so that all students are encouraged to use higher-order learning processes. ‘Constructive alignment’ (CA) is such a system.”

Constructive alignment is not something that is likely to be picked up in a student survey, though it would be interesting to see an attempt to do so. It is probably also very difficult to examine in the Teaching Excellence Framework. Students probably do have an intuitive sense of how well the constituent parts of their course fit together. The crucial point is that students will only understand this through how their course influences what they do, and not merely by what universities and course directors say. Just being told that ‘independent study is important’ is not enough – courses have to be designed well so that it feels important.

Is value influenced by perspectives on graduate prospects?

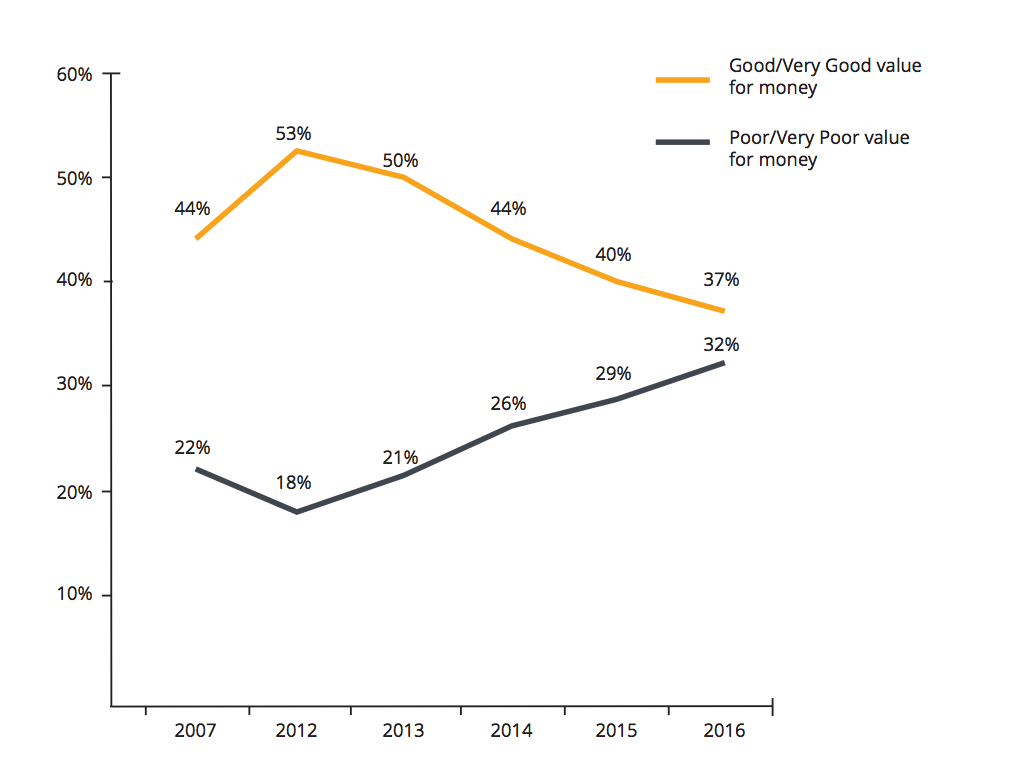

The headlines covering this survey focused extensively on the rapid decline in students’ perceptions of ‘value for money’ over the past few years, particularly in England. Scotland continues to see much higher perceptions of value for money than the rest of the UK, suggesting that this trend is linked at least in some way to tuition fees. ‘Value for money’ was linked strongly to meeting expectations, perceptions of teaching quality and contact hours, and differed wildly by subject studied.

Source: HEPI-HEA (2016)

One other hypothesis that might be considered when it comes to value for money is students’ perceptions of their post-study prospects and whether they expect to fully repay their student loans. An NUS study last year showed that 55% of graduates do not expect to fully repay their loan debts and that the majority of study leavers were anxious in some way about their debts. Other studies from recent years have shown that students have tended to over-estimate their job and earnings prospects, though the increased frequency of stories about relatively bleak graduate prospects may have changed this. It would be interesting to see how students’ estimations of their prospects have changed over recent years and whether they are linked to perceptions of value for money.

Beneath the headlines – insights you may have missed

Besides the headline stories on value for money, teaching quality and expectations being (or not being) met, the HEPI-HEA research had some interesting specific insights into important challenges for the sector.

The survey suggests that students living at home are likely to face particular challenges in being fully engaged with their studies, being satisfied with their course and meeting their own expectations. Crucially, black and minority ethnic students are far more likely to live at home than white students, and as last week’s UCAS data release suggested, are far more likely to study close to home in order to do so. One aspect of tackling the BME attainment gap will be considering how universities improve at engaging these students who live off campus.

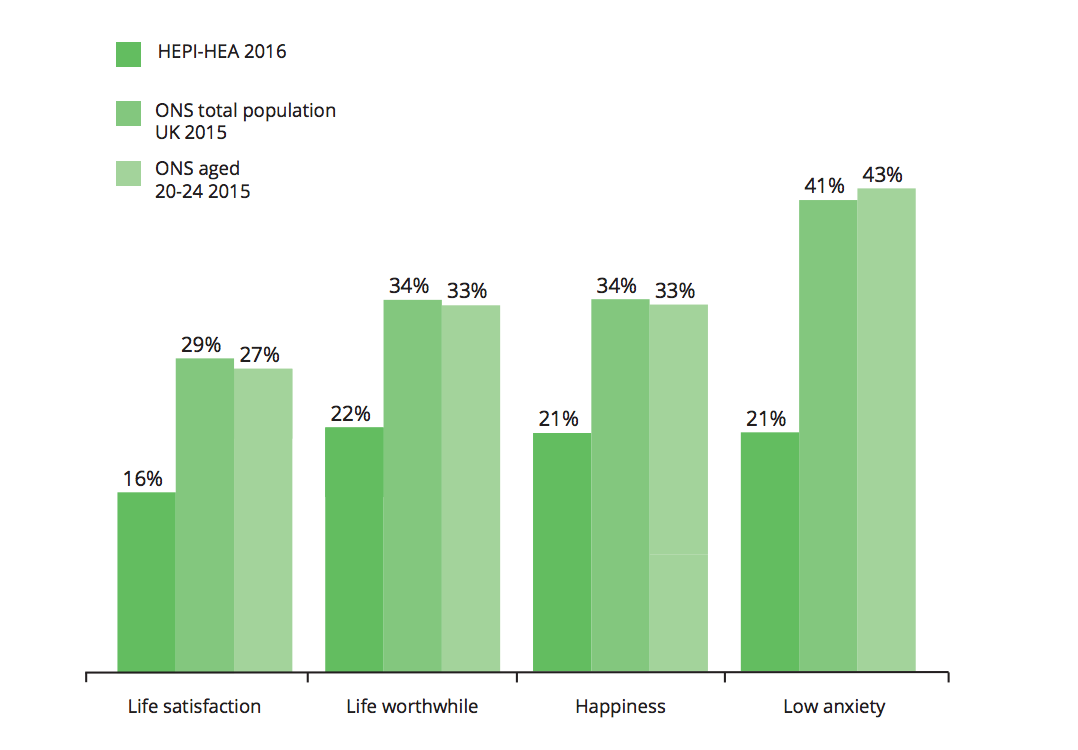

Finally, this year’s report shows continuing high levels of anxiety and lower levels of student wellbeing compared to the rest of the population. Though this may be down to a methodological amendment from previous surveys, it corroborates other research showing that student mental health should be seen as a ‘red-light issue’ for the sector. With increasing frequency, students’ unions are making mental health a priority issue for campaigning, and organisations such as Student Minds and Nightline are becoming increasingly visible. More research desperately needs to be done in this area to identify causal factors, and HEPI and HEA must be credited with giving the most comprehensive comparison of student wellbeing with the wider population.

Source: HEPI-HEA (2016)

There’s another headline from this survey which would benefit from your “what students/institution say…what they mean…” approach.

People have picked up on the question about “important characteristics of teaching staff”, for which the least important characteristic of those presented to respondents was “they are currently active researchers in their subject”.

Yet the most important was “they maintain and improve their subject knowledge on a regular basis”. If only there was a good way of doing that…

The scores for characteristics being “demonstrated a lot” were almost equal for the two characteristics: 35% for “improve subject knowledge” and 38% for “currently active researchers”, but I’d love to be able to unpick what differentiates the two as ‘demonstrated’ characteristics. What would that read like as a “what students might mean…”?

Somewhere the link between research and maintaining and improving subject knowledge is getting lost.

We agree that there is no “silver bullet” to good teaching and good course design. However, student engagement is a critical factor in student learning gain, and engagement of students forms a key part of teacher training courses and continuous professional development.

Recent analysis by the HEA has established a positive link between institutional investment in a professional development programme for teaching staff and strong levels of engagement reported by students in the UK Engagement Survey (UKES).

The research, which was independently verified by Dr Elena Zaitseva of Liverpool John Moores University, found a statistically significant relationship between high levels of HEA Fellowship and strong UKES scores. UKES measures student behaviours and their engagement with their learning, with the HEA’s research identifying a correlation between Fellowship and both teaching and staff interaction.

Investment in teaching means everybody wins: engaged students with better potential for improved outcomes; motivated teaching staff whose work is rewarded; and institutions who are better placed to demonstrate value for money through great teaching.