Longitudinal Education Outcomes (LEO) data enables us to know how much UK graduates of different courses at different universities are earning one, three, five or ten years after graduating. It does this by linking up tax, benefits, and student loans data.

But it’s just as important to state at the outset what LEO is not. LEO does not help us identify the universities with the best or most effective teaching, nor is it a measure of the ‘value added’ by a university degree. LEO is not a performance indicator. It isn’t even a predictor of how much students at any university or on any course will earn in the future: it’s historical data, and future labour market outcomes are arguably too variable to be predicted based on past outcomes.

LEO is phenomenally complicated and riddled with caveats. Its implications for the future size, shape and funding of higher education could be huge. Wonks and sector leaders will be hoping that this new rich and illuminating public dataset will be used with care and precision by policymakers going forward. There is plenty to consider, and so here we lay out everything you need to know about LEO.

Background

Up until recently, the only available data and statistics on higher education graduates’ employment and salary outcomes came from self-reporting surveys. Most of us recognise this primarily through the Destinations of Leavers from Higher Education (DLHE) survey, run by the Higher Education Statistics Agency. The UK Labour Force Survey, run by the Office for National Statistics, has also collected data on graduate employment and salaries through a sampled survey.

The downsides to what we can now refer to as ‘old DLHE’ have been well rehearsed. Though the self-reporting survey has been found to be broadly reliable in comparison to LEO – what the specialists call ‘administrative data’ – there are some discrepancies. Most importantly, the survey limited the timescale of available data, reaching at most three and a half years after graduation. LEO can follow graduates for much longer after they leave education, and is also more accurate.

LEO data has been in the works for some years now. A few years ago, a project funded by the Nuffield Foundation, conducted by the Institute for Fiscal Studies, and approved by former Universities Minister David Willetts, began the first linking of anonymised data from the English Student Loan Book with tax and benefits data from HMRC and DWP. While this project was underway, the Small Business, Enterprise and Employment Act (2015), passed in the final days of the Coalition, authorised the permanent linking and ongoing publication of this data by DfE.

The Nuffield-IFS project finally published its research last spring, in what was a true landmark for research into graduate outcomes in the UK. You can read Wonkhe’s full analysis of that report here.

After two experimental statistical releases and an informal consultation last year, the first full set of subject and institutional LEO data will be released publicly on June 13th. Though initially only planned for English universities, the Scottish and Welsh funding councils have issued circulars in recent weeks confirming that the release will also include universities from those nations. It is expected to cover:

- Full-time and part-time first-time study leavers. Importantly, this includes leavers who did not graduate with a degree.

- Bachelor’s degrees only – LEO does not include postgraduate, HND/HNC or PGCE courses.

- UK domiciled graduates (at time of study) – the loan book does not include non-EU students, while the majority of EU students return to their country of domicile after graduating.

LEO enables us to know how much graduates of different courses (currently grouped by JACS subject) at different universities are earning one, three, five, or ten years after graduating. The earliest data released so far covers 2003-04 graduates, the only cohort for which we currently have ten years of data. The data can also be broken down by graduate characteristics including gender, ethnicity, region (at application date), age (when commencing study) and (crucially) prior school attainment. The IFS study also used student loan data to reverse-engineer a measure of parental income based on the amount borrowed from the SLC, but it does not appear that the government’s public release will include this.

The caveats

The region in which a graduate lives has a significant influence on salary levels. Workers in the South East and London tend to earn higher gross salaries. The proportion of graduates who stay close to their university varies between institutions but, on the whole, universities and courses in London, or with a high proportion of graduates living in London, are more likely to see a higher graduate ‘premium’ than those in the North East, South West, Scotland, Wales and Northern Ireland.

This is raw, uncontrolled data. None of the data released can be interpreted as the ‘value added’ by universities, because their student intakes are so diverse. Last year’s IFS study showed that one of the most significant influences on a graduate’s salary outcomes was whether they came from an already wealthy background. The raw data is also likely to show school attainment to be a strong predictor of graduate earnings. Raw LEO data should not be seen as a measure of universities’ performance in preparing graduates for the labour market.

Furthermore, LEO is not graduate premium information, which assesses the net benefit for an individual of going to university after deducting the costs, including time spent out of the labour market while studying (lost earnings), and the costs of tuition and maintenance. Given its reliance on the student loan book for data, LEO does not yet exist for non-university qualifications such as apprenticeships, and so it will not enable us to accurately compare the benefits of taking university or non-university routes into the labour market.
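To see why LEO on its own cannot produce a graduate premium, it helps to spell out what the calculation needs. The sketch below is a minimal illustration, assuming a hypothetical counterfactual earnings path for the same person without a degree (which LEO does not provide) and ignoring the income-contingent repayment of student loans. The names `EarningsPaths` and `net_graduate_premium`, and all figures, are invented for illustration.

```python
from dataclasses import dataclass


@dataclass
class EarningsPaths:
    """Hypothetical annual earnings (in pounds) from the year study starts."""
    graduate: list[float]      # zero (or low) during the years of study
    non_graduate: list[float]  # counterfactual: entering work instead of studying


def net_graduate_premium(paths: EarningsPaths, direct_costs: float,
                         discount_rate: float = 0.0) -> float:
    """Discounted earnings gap over the period minus the direct costs of study.

    Lost earnings during study are captured by the gap itself, because the
    graduate path is zero in those years while the non-graduate path is not.
    Direct costs (tuition and maintenance) are simplified to a single up-front sum.
    """
    if len(paths.graduate) != len(paths.non_graduate):
        raise ValueError("both earnings paths must cover the same years")
    gap = sum(
        (grad - non_grad) / (1 + discount_rate) ** year
        for year, (grad, non_grad) in enumerate(zip(paths.graduate, paths.non_graduate))
    )
    return gap - direct_costs


# Entirely made-up figures: three years of study, then three years in work.
paths = EarningsPaths(
    graduate=[0, 0, 0, 22_000, 25_000, 28_000],
    non_graduate=[15_000, 16_000, 17_000, 18_000, 19_000, 20_000],
)
print(net_graduate_premium(paths, direct_costs=30_000))
```

With these invented numbers the six-year figure is -60,000: over a short horizon the premium is negative, and it only turns positive if the earnings gap persists long enough. LEO can supply the graduate row, but not the counterfactual row or the costs.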

The data is not comprehensive, and some institutions and subjects will have high percentages of graduates with “activity not recorded”, particularly when it comes to salaries. The explanations offered by the Department for Education include moving out of the UK, being self-employed, and voluntarily leaving the labour force.

And finally, there’s the not-insignificant matter of time-lag. As all financial investment disclaimers say, ‘past performance is no indicator of future earnings’. Perhaps universities will have to add that to their own marketing materials in the future. Graduates whose salaries are recorded at the ten-year mark will have begun university in 2002/3; a lot has happened since then. In particular, the financial crisis, the subsequent recession, and a period of particularly poor wage growth will percolate through this data. Wages could get better for future generations of graduates, or they could get worse. Much depends on the future health of the economy, and so it is not appropriate to see LEO as a predictor of current university entrants’ future earnings. On top of the time-lag, the data is not adjusted for inflation, which further complicates year-to-year comparisons.
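Because the figures are not adjusted for inflation, comparing salaries observed in different tax years means deflating them to a common price base first. A minimal sketch, assuming a generic price index: the index values and median salaries below are invented, `to_real_terms` is a hypothetical helper, and the choice of index (CPI, CPIH, RPI or an earnings index) is itself a judgement call.

```python
# Hypothetical price index values (base year 2015 = 100); NOT real CPI/CPIH figures.
PRICE_INDEX = {2010: 90.0, 2015: 100.0}


def to_real_terms(nominal: float, year: int, base_year: int,
                  index: dict[int, float] = PRICE_INDEX) -> float:
    """Re-express an amount observed in `year` in `base_year` prices."""
    return nominal * index[base_year] / index[year]


# Hypothetical median salaries for two cohorts, each observed in a different tax year.
median_2010 = 24_000
median_2015 = 26_000

nominal_change = median_2015 - median_2010
real_change = median_2015 - to_real_terms(median_2010, 2010, base_year=2015)

print(f"nominal change: {nominal_change:+,.0f}")
print(f"real change (2015 prices): {real_change:+,.0f}")
```

With these made-up figures, a nominal rise of £2,000 turns into a real-terms fall of about £667, which is the kind of reversal that unadjusted year-to-year comparisons can hide.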

What we know already – the experimental release

Wonkhe conducted some analysis of last year’s experimental release, which included the following:

- Universities listed by employment and further study outcomes

- Subjects of study listed by employment, further study, and salary outcomes.

- Subject of study and university listed by employment, further study, and salary outcomes, for Law only.

The release was for English universities only.

Frankly, the release told us far more about the nature of the graduate labour market than it did about universities. What has been most striking from both the IFS study (which controlled for multiple variables in graduate characteristics) and the raw experimental data from December (which did not) is that – some subjects aside – it is not how much any particular university appears to matter for many graduates’ earnings prospects, but how little.

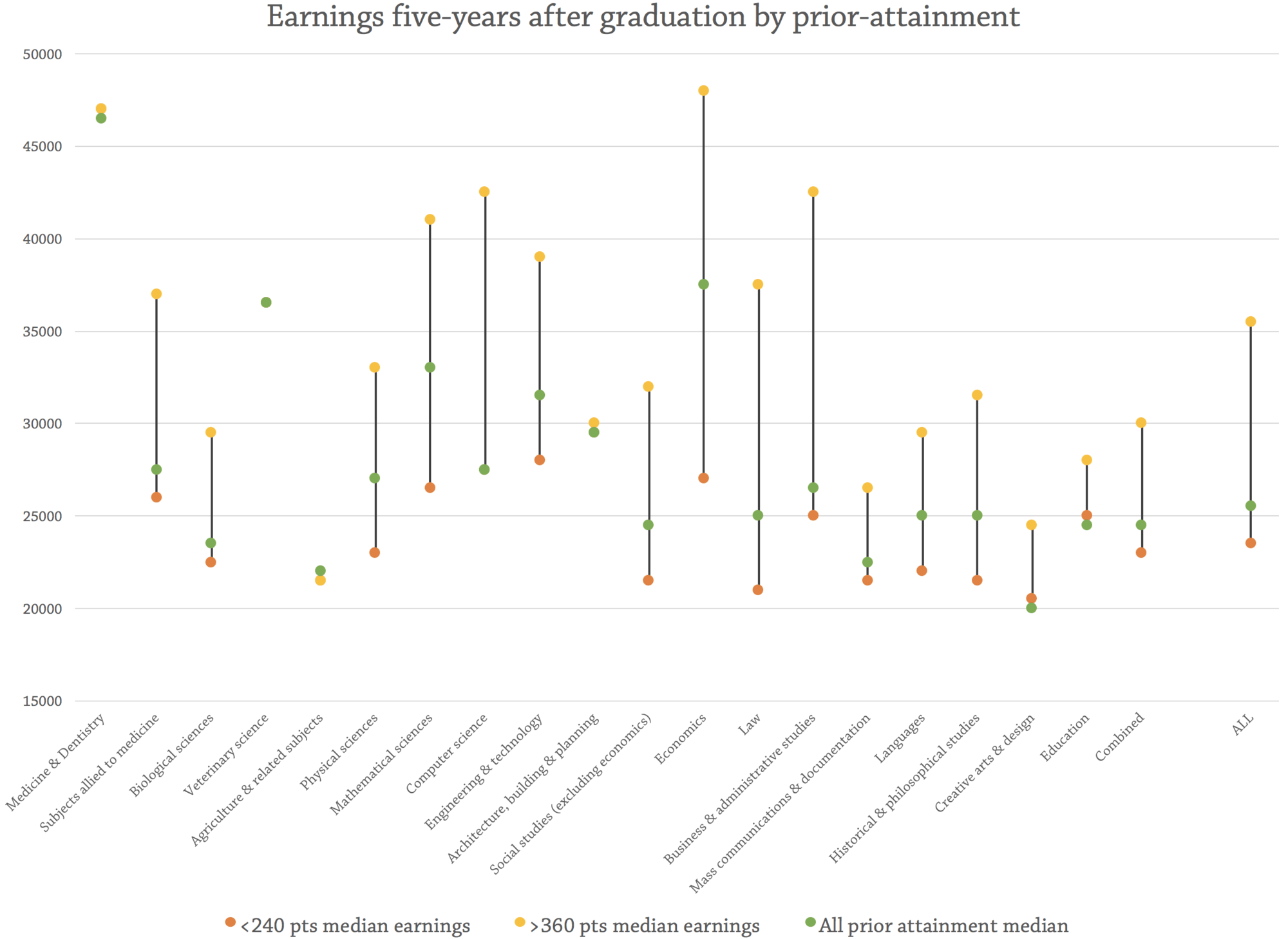

Prior attainment appears to be a significant factor in earnings. Outside most of the specialist subjects such as medicine, veterinary science, architecture and education, high ‘prior attainers’ consistently outperform ‘lower attainers’ regardless of the subject studied.

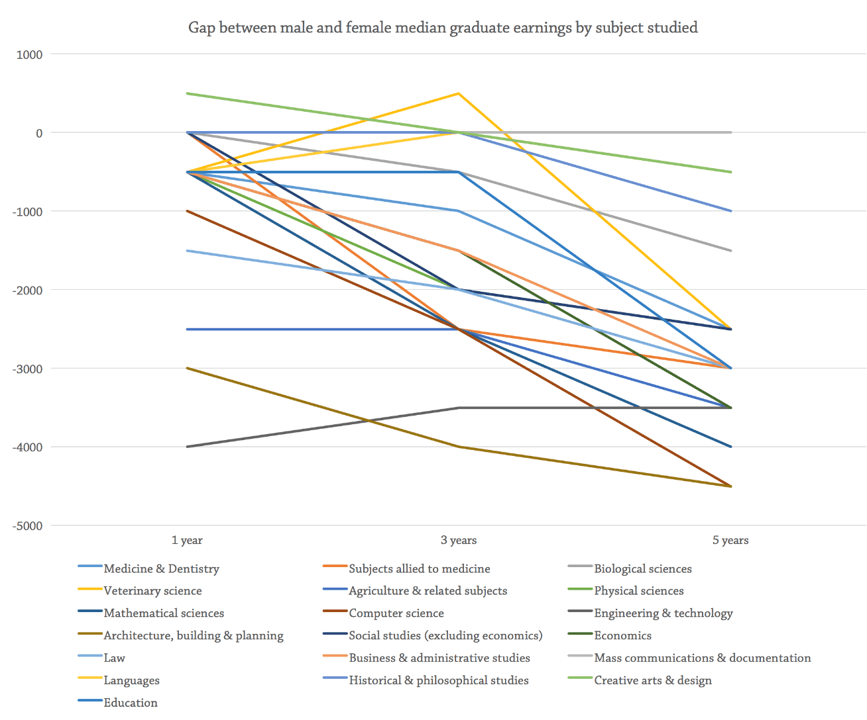

Earnings gaps are particularly pronounced for women and ethnic minorities, and dramatically so for ethnic minority women. The absolute gender pay gap and the pay gaps for several ethnic minorities do not simply build up over time: they appear as soon as graduates enter the labour market, again regardless of subject studied. These gender and racial pay gaps then widen the further graduates progress into their careers. The pay gaps between white men and non-white women are particularly striking.

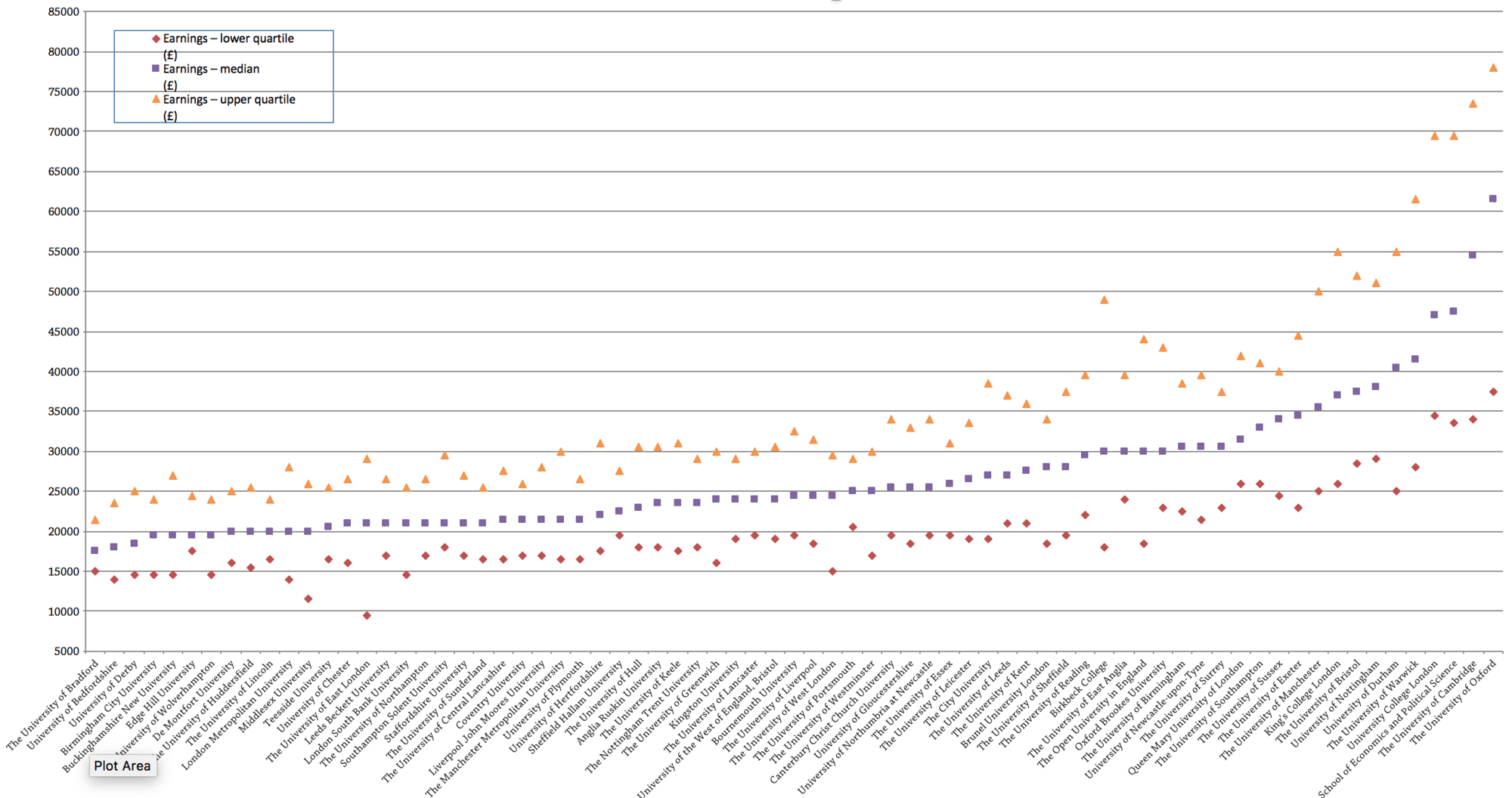

Finally, Law proved to be an interesting choice for testing the subject and university release. The data shows that there is tough competition for the top salaried law jobs and that the graduates most likely to attain these heights graduated from a very small number of elite, highly selective universities. There is a ‘long tail’ of earnings outcomes for law graduates from most universities, including many relatively selective institutions such as Leicester, Liverpool and Lancaster. Get set for a similar graph for twenty different university subjects come June 13th.

However, the median earnings of law graduates at less selective universities are still on a par with the national median wage, suggesting that studying law anywhere has still been a good bet if you want to earn an average wage. After deducting fees, inflation and years out of the workforce, the ‘graduate premium’ may not quite hold up, but this has to be weighed by prospective students as a ‘risk-reward’ choice. Studying law at a post-92 institution, even if you’ve got next to no chance of becoming a high-end barrister or judge, can still pay off compared to not going to university at all.

Who loves LEO?

The government’s determination to understand more about the employment and salary outcomes of different educational pathways comes from a very particular strand of ‘variable human capital investment’ thinking in the Treasury. Student loans expert Andrew McGettigan has written in some depth about the Treasury’s view of higher education and its relationship to the economy. McGettigan argues that “the focus of policy has been the transformation of higher education into the private good of training and the positional good of opportunity, where the returns on both are higher earnings”.

Such a view echoes the godfather of modern free market economics, Milton Friedman, who wrote the following in ‘The Role of Government in Education’ in 1955:

“[Education is] a form of investment in human capital precisely analogous to investment in machinery, buildings, or other forms of non-human capital. Its function is to raise the economic productivity of the human being. If it does so, the individual is rewarded in a free enterprise society by receiving a higher return for his services.”

We might compare this with the government’s own guidance on the SBEE Act in 2015:

“A specific benefit will be that new statistics on outcomes will be made available to help students make more effective course choices. It is not possible to quantify these benefits, but more informed student choice would ensure the education system is more accountable and reactive to its economic value.”

Or with the then universities minister David Willetts, speaking back in 2012:

“Imagine that in the future we discover that the RAB charge [loan non-repayment rate] for a Bristol graduate was 10%. Maybe some other university … we are only going to get 60% back. Going beyond that it becomes an interesting question, to what extent you can incentivise universities to lower their own RAB charges.”

At present, the Treasury continues to underwrite a significant proportion of student loan repayments under the terms of income contingent loans. LEO’s power is that it can be used to identify the long-run individual economic returns to higher education, which can in turn be used to regulate (read: minimise) levels of state subsidy.
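The RAB charge Willetts refers to is, broadly, the share of the money lent that the government does not expect to get back, measured in present-value terms; on his numbers, getting 60% back implies a RAB charge of 40%. The toy sketch below shows how earnings data of the kind LEO provides could feed that figure. It assumes a simplified income-contingent scheme (9% of earnings above a threshold) with no interest and no write-off; the threshold, function names and earnings figures are hypothetical, and the official RAB methodology is considerably more involved.

```python
def income_contingent_repayments(earnings: list[float], balance: float,
                                 threshold: float, rate: float = 0.09) -> list[float]:
    """Annual repayments: `rate` of earnings above `threshold`, stopping once
    the balance is cleared. Interest and write-off are deliberately not modelled."""
    repayments = []
    remaining = balance
    for annual_earnings in earnings:
        due = min(remaining, max(0.0, annual_earnings - threshold) * rate)
        repayments.append(due)
        remaining -= due
    return repayments


def rab_charge(amount_lent: float, repayments: list[float], discount_rate: float) -> float:
    """Share of the amount lent not expected back, in present-value terms.

    repayments[t] is assumed to arrive t + 1 years after the money is lent.
    """
    present_value = sum(r / (1 + discount_rate) ** (t + 1) for t, r in enumerate(repayments))
    return 1 - present_value / amount_lent


# Hypothetical earnings path (the kind of figure LEO reports) and an illustrative threshold.
earnings = [20_000, 30_000, 40_000]
repayments = income_contingent_repayments(earnings, balance=50_000, threshold=21_000)
print(repayments)
print(rab_charge(50_000, repayments, discount_rate=0.0))
```

Over this three-year horizon almost nothing is repaid, so the RAB figure comes out at about 95%. Real loans run for decades, which is why long-run earnings data by course and institution matters so much to the Treasury.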

There has been growing suspicion in recent years about the problem of ‘over-education’ and graduates in non-graduate occupations, with the implicit assumption that not all forms of higher education are of equal value (either culturally or economically). Just think of the regular complaints of ‘Mickey Mouse degrees’ and ‘too many people going to university’. The cultural complaint about the relative merits of studying certain courses at certain universities will likely align with what LEO shows us about salary returns.

Those in higher education often baulk at this hyper-utilitarian and instrumentalist assessment of the value of going to university. The challenge of making that objection cut through to the hard-headed number crunchers at the Treasury will only grow once LEO is released.

Policy implications of LEO

LEO is commercial and political dynamite, and could fundamentally alter the market viability of certain university courses. DfE is determined that the data should not leak before release. Universities will only have access to their own data before it goes public.

A lot will, of course, depend on how the data is reported. Though LEO will not form part of the official public information on higher education (and so will not appear on Unistats or UCAS), there will no doubt be a lot of media coverage. Newspapers may choose to incorporate it into league tables.

Then there’s last year’s White Paper:

“The extensive coverage of this dataset – called the Longitudinal Education Outcomes (LEO) dataset – will make this data a valuable source of information for prospective students to have a better picture of the labour market returns likely to result from different institution and course choices. LEO data will support our quality assessment processes for higher education providers including TEF awards and it will enable better benchmarking of institutions against their peers.”

Quite how LEO might align with TEF is very unclear. If it were incorporated as outcomes and salary data into this year’s exercise in place of the two DLHE metrics, it would account for only two of the six core metrics, a third of what feeds into a TEF outcome. Doing so under the current system would also require some kind of ‘benchmarking’ of LEO, which would no doubt produce some very interesting results. Oxford may simply not be rewarded for its law graduates earning six-figure salaries if those graduates all have parents who earned the same…
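TEF benchmarks are, broadly, sector averages re-weighted to match each provider’s student mix. A minimal sketch of that idea applied to earnings, assuming hypothetical student groups and figures; the grouping by parental income is purely illustrative, since the public LEO release is not expected to include it, and `benchmark` is a hypothetical helper rather than any official method.

```python
def benchmark(intake_shares: dict[str, float], sector_means: dict[str, float]) -> float:
    """Expected mean earnings for a provider, given its intake mix.

    Each student group's sector-wide mean is weighted by that group's share of
    the provider's graduates. Weighted averaging is valid for means and
    proportions; medians do not combine this way.
    """
    total = sum(intake_shares.values())
    return sum(share / total * sector_means[group] for group, share in intake_shares.items())


# Hypothetical sector-wide mean earnings by parental background.
sector_means = {"high_parental_income": 40_000, "other": 28_000}

# A hypothetical highly selective provider whose intake is mostly from wealthier families.
intake = {"high_parental_income": 0.8, "other": 0.2}
actual_mean = 38_000

expected = benchmark(intake, sector_means)
print(f"benchmark {expected:,.0f}, actual {actual_mean:,}, difference {actual_mean - expected:+,.0f}")
```

Here the provider’s raw mean of £38,000 sits only about £400 above its benchmark of £37,600, which is the sense in which benchmarking could blunt the apparent advantage of highly selective institutions.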

A possible plus side of LEO, for those of us more interested in the Elysian or intrinsic benefits of higher education, is that its introduction has been the catalyst for a fundamental review of the DLHE survey, which will in future include a much richer range of data on graduates’ experiences, outlooks, hopes and aspirations. LEO may tell us that the top law graduates from Oxford are earning nearly £80,000 by the time they are 26, but the new DLHE might tell us that they are also miserable.

For two decades now, higher education’s rapid expansion has been based on two premises asserted by government policymakers: first, that higher education continues to return a healthy ‘graduate premium’ for individuals; and second, that the economy demands ever greater numbers of people with higher-level skills. This line of thought has not been without its critics, such as Baroness Alison Wolf, but has generally been welcomed by a university sector happy to keep expanding. LEO could undermine both of these assumptions, by showing how widely graduate earnings vary across institutions and courses, and by highlighting instances where graduates are earning less than their non-graduate peers.

This challenge to universities is rather fundamental. How exactly the government will respond is difficult to predict, particularly following last week’s election results. If a Labour government led by Jeremy Corbyn were elected in the near future and introduced a cap on student numbers as a means of abolishing tuition fees, LEO could become even more powerful.

The possible political uses of LEO are many and varied. As I’ve written before on Wonkhe: the only way to stop a bad guy with data is a good guy with data. Or if you prefer: data doesn’t create bad policy; people create bad policy. Let’s hope that LEO can help us create better policy, however badly it gets spun when it is released tomorrow.

There has been a release of experimental LEO data for apprenticeships https://www.gov.uk/government/statistics/average-earnings-post-apprenticeship-2010-to-2015 , though as yet barely any Higher Apprenticeships made the cut for obvious reasons.

Comparisons to degree data are always going to be dangerous given the different starting points of the individuals in the data.
