After a long wait, the Office for Students (OfS) has published the findings of two (out of eight) of its new quality “assessment visits” focused on Business and Management courses at two universities.

They set out the advice of its newly recruited independent academic experts, who carried out assessments for OfS during the 2022-23 academic year.

These are the fabled “boots on the ground” exercises – so as well as a whole heap of desk evidence, the teams undertook on-site visits to chat to students, staff and managers to assess the provision against OfS’ B conditions.

At London South Bank University, the assessment team found no areas of concern. But at the University of Bolton, the assessment team did find areas of concern.

In Bolton the assessment team found that academic staff resource “could be overly stretched”, it had concerns about “support for avoiding potential academic misconduct and the provision of formative feedback”, and found that academic support for foundation students once on the main programme “was not sufficient”.

There’s no regulatory action yet – OfS will now “carefully consider the findings” as it decides whether any further regulatory action is appropriate.

In some ways, the two reports are terrific – a decent external deep dive into issues that aren’t just a hobby horse for politicians but ought to be a real concern for universities, their staff and students on the programmes.

As with the previous thematic report on blended learning, they go some way to explaining how OfS’ definition(s) of quality will apply in practice.

But they do raise some interesting questions about OfS’ areas of focus, its selection of providers to be assessed, its supposed risk-based approach, the pace and breadth of its activities and OfS’ relationship to internal quality processes.

London South Bank VC Dave Phoenix has a great blog up on HEPI reflecting on the process there – here we focus in on the Bolton report.

Using its intelligence

As a brief reminder, the idea here is that every year OfS will select a number of providers for investigation based on regulatory intelligence – including, but not limited to, student outcome and experience data and relevant notifications from students and others. As part of this process, OfS may commission an assessment team, including external academic experts (but notably not including students) to undertake an assessment of quality against its conditions of registration – which these days differ from and are more granular than the QAA Quality Code.

Having consulted for a long time on the approach, it first announced this round of business and management deep dives back in May 2022 – when a press release that originally announced it was launching “eight investigations into poor quality courses” got mysteriously edited into the less conclusive headline of “OfS opens investigations into quality of higher education courses”.

This followed a series of ministerial nudges to crack on with its work on “low quality” provision, enabling the universities minister of the day to proclaim that she was putting “boots on the ground” – emphasising (as is traditional) the threats of fines of up to half a million pounds or two per cent of turnover, and loss of access to the loan book if improvement wasn’t delivered.

The precise recipe for determining that it was business and management that should go first, and the sub-recipe for selecting the lucky eight has never been disclosed – there’s only a range of vague criteria both in the ministerial guidance letters and OfS documentation, which perhaps explains why until now we’ve not officially known who any of the eight were and why that press release got edited.

And the scope of the deep dive is carefully drawn too. In Bolton’s case, the OfS-appointed team assessed the quality of the business and management courses provided by the university (excluding courses delivered by partner organisations and transnational education).

We’re not told whether that’s because its regulatory intelligence (including, but not limited to, student outcome and experience data and relevant notifications) suggested no issues there, or for other reasons relating to the team’s size, capacity, or ability to get into those partners.

It’s also notable that the report represents the conclusions of the team as a result of its consideration of information gathered during the course of the assessment to 6 January 2023. You do wonder what on earth has been going on in the eight months since, and why this particular launch date (coinciding, as it nearly does, with the launch of a House of Lords Industry and Regulators Committee report into the work of the OfS) was chosen.

Narrowing the focus

Set all of that aside, and what we have here is effectively one of the longest external examiner reports I’ve ever read. There’s a description of the size and shape of provision, a run through of the university’s approach to supporting students, some detail on how the team went about its work, and then the team’s formal assessment of matters relating to quality under ongoing conditions of registration B1 (academic experience), B2 (resources, support and student engagement) and B4 (assessment and awards).

Interestingly, early on the assessment team says that it decided to focus on undergraduate provision – “differential student outcomes data and cohort sizes” meant that this was, in the assessment team’s view, in line with a risk-based approach. It bases that on things like the completion rate for full-time first degree students over four years being 65.2 per cent, compared with a completion rate of 92.6 per cent for full-time postgraduate taught masters students over the same period and same subject area.

At each stage of narrowing both from OfS and its assessment team, the signal is: poor outcomes means we’ll interrogate the provision. It’s not clear whether the team had a moan to OfS that it couldn’t use student satisfaction data because OfS has never gotten around to developing a survey for PGTs.

The team then applied some further narrowing. Using that “risk-based approach” thing again, we’re informed that the team focused on three specific undergraduate courses because they represent “a significant majority” of undergraduate students in Bolton’s Institute of Management:

- BSc (Hons) Business Management (and associated pathway degrees)

- BSc (Hons) Business Management with foundation year

- BA (Hons) Accountancy

The section on B1 is pretty short. A desk review of documentation obtained both before the visits and while in situ, coupled with discussion with staff, students and even the Industry Advisory Board for the Institute of Management, led the team to “not identify any concerns” with B1 matters like courses being “up-to-date”, providing “educational challenge”, “coherence” and effective delivery, or requiring students to develop “relevant skills”. But B2 – resources, support and student engagement – is another story.

Stretched

The headline here is really about capacity. At the time of the visit, the university had a range of support schemes in place, some of them recently introduced. “LEAP Online” is a digital resource that aims to support students’ academic and personal development, “LEAP Live” is a wide-ranging programme of in-person and online sessions covering academic and study skills as well as wellbeing topics, and “LEAP Forward” is the university’s personal academic tutoring (PAT) system, in operation since 2015.

There’s also a system for monitoring student interactions and engagement called “PULSE” – designed to support those PATs in monitoring and engaging with their tutees, and a recently created Student Success Zone (SSZ) in the school where students can seek support with digital literacy, academic systems, or academic writing.

The team has no argument with all of that – albeit that some aspects were so new as to not yet be capable of being effectively evaluated (either by the university or by the team). What it did have a problem with is something much more basic and fundamental – the number of academic staff around to support students.

This is partly about context. To reach a view on the sufficiency of academic staff resource and academic support (a key concern of Condition B2.2.a), the assessment team sought to understand the context of the undergraduate student cohort – noting that B2 expects there to be “significant weight” placed on the particular academic needs of each cohort of students based on prior academic attainment and capability, and the principle that the greater the academic needs of the cohort of students, the “number and nature of the steps needed to be taken are likely to be more significant”.

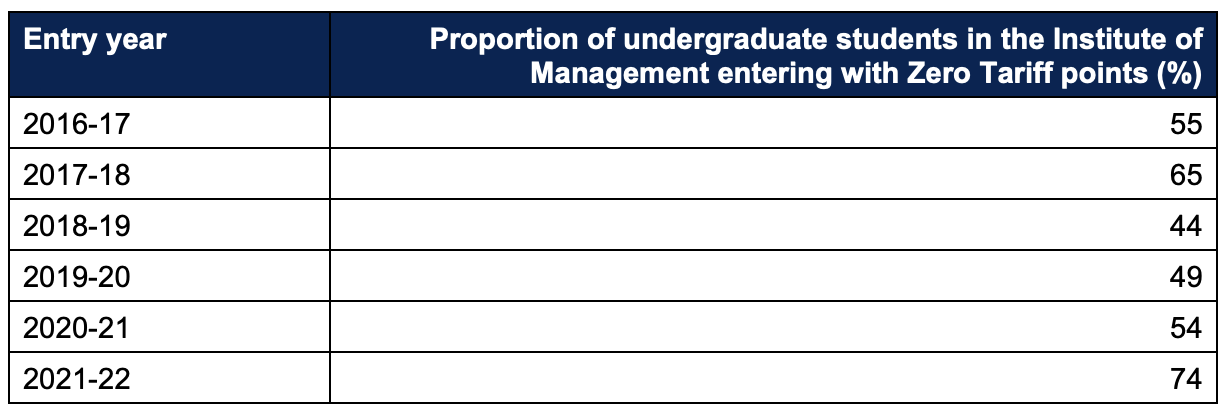

On that it noted, for example, that the proportion of undergraduate students joining the Institute of Management with a “Zero Tariff” entry profile has, in four of the last six years, been a majority of the student intake:

(This includes the “non-standard entry” route, as well as students that have other entry qualifications that do not carry UCAS points, such as apprenticeships and foundation years at other providers.)

It also noted that OfS data showed the proportion of students entering business and management undergraduate degrees at the university aggregated over a four-year period with A-levels at grade CDD or higher was 1.5 per cent – and those with BTECs at grade DDM or higher, or one A-level and two BTECs, was 2 per cent.

These are, of course, the sorts of student that many politicians would argue might be “better off” doing something more vocational. OfS’ buffer position is that universities should widen participation – but only if they can support those students to get good outcomes. And B2 is therefore central to delivering B3.

Part of the difficulty in doing that, of course, is that the external context – particularly over recent times – has been anything but stable, especially for students at the sharper end of the socioeconomic spectrum. The team notes staff commentary that a high proportion of students “balance their studies with significant levels of paid work and/or caring responsibilities”, with some staff noting that “the main challenges in [students] completing” are “family commitments” or trying to balance “full-time hours of employment and studying”.

The question for the team therefore becomes: is the problem on the student side of the partnership (even if it’s not their fault), or is there more the university could have done?

It’s the ratio, stupid

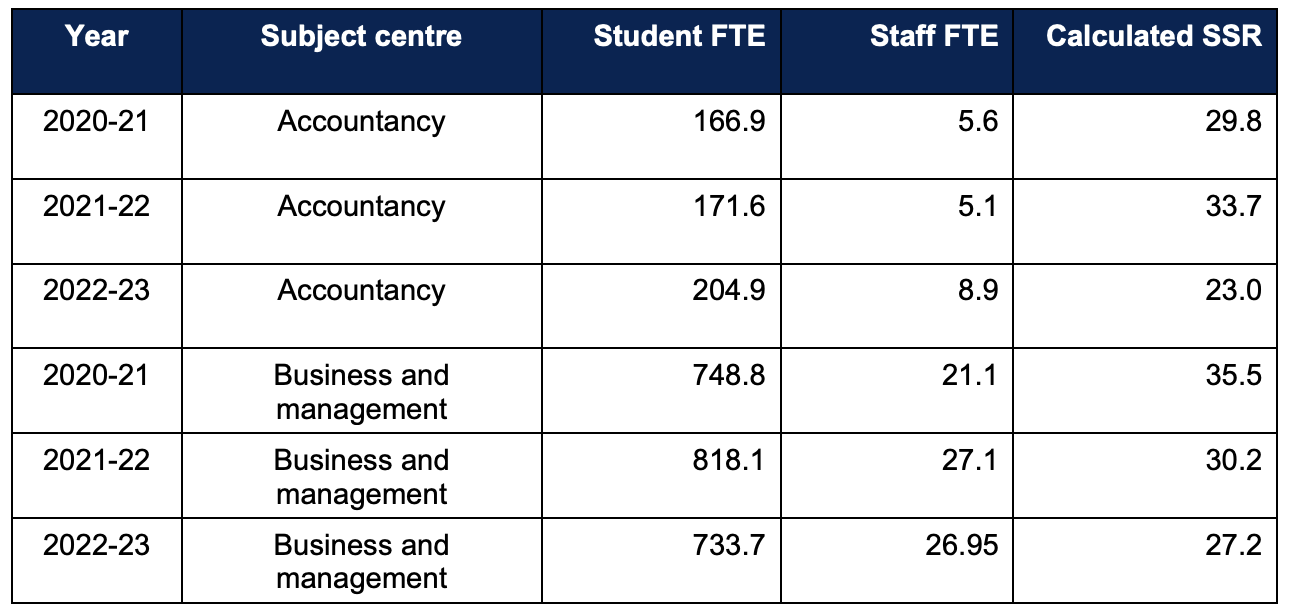

First in the firing line is the staff-student ratio – backed up by senior staff commentary that academic staff resource “had not been, and was not at” the “target level”. Senior leaders said that there had been “challenges in recruitment”, but that the university was “investing in staff”, meaning that the “staff-student ratio has peaked” and was expected to fall.

As well as the numbers, the team noted that a high proportion of academic staff were studying for postgraduate (primarily doctoral) qualifications. That’s a positive in principle, but having so many staff with study commitments also represents “additional challenges” to the level of academic staff resource available for student support.

Crucially, the assessment team identified areas of academic support that seemed to have been affected by “stretched” academic staff resource – including on marking times, the personal academic tutoring system and assessment tutorials. Some students described not always having feedback in advance of their next assessment on a module, few students reported having met with their PAT formally to discuss their progress (in accordance with the stated policy) and the assessment team noted that advice on the delivery of academic support in groups rather than one-to-one sessions “seemed to have been given due to resource capacity rather than pedagogical choice”.

All of that led the assessment team to the view that the “stretch” in academic staff resource meant it was not sufficient to ensure consistent delivery of academic support, as the examples above show, including the support that the university’s own policies say students should receive.

There is, of course, a sense here that the risks that need to be managed are those caused directly by the government – the dwindling value of the unit of resource. If fees for this course were worth the same in real terms as they were in 2014, there may not have been a staff shortage. OfS has, perhaps, learned the limitations of trying to do more with less.

So what?

That’s arguably the most substantial concern in the report – although there are three more. The team found inconsistent implementation of the preventative and support processes outlined in the university’s Academic Misconduct Policy, arguing that consistent signposting to specific academic support at Level 4 would help students to avoid academic misconduct at later stages.

It also found that the availability and accessibility of academic support via formative feedback was not currently sufficient for all students, and concluded that the levels of academic support to meet the needs of progressed foundation year students were not sufficient. It did not find any concerns relating to assessment and awards.

It’s also worth saying that the report describes the university as already working to address many of the issues identified – and given the time since the visit(s), some may well have been dealt with by now.

So what have we learned? As I suggested in the intro, if you’re trying to work out how OfS and its new assessors might interpret the B Conditions, this is all pretty helpful – giving real food for thought to those involved in internal quality processes on the sorts of things that should be looked at and the questions that ought to be asked.

It’s notable, I think, that the Quality Assurance Agency’s latest salvo in the Quality Wars – a definition of “quality” for policymakers and other lay stakeholders – is fairly well supported here, and arguably assessed more vividly than we ever saw in QAA reports of old:

Quality in higher education refers to how well providers support students consistently to achieve positive outcomes in learning, personal development and career advancement, while meeting the reasonable expectations of those students, employers, government and society in general.

Back in 2021, OfS CEO Susan Lapworth argued that some of this work might act as useful signalling. It’s not so much that these two providers (or indeed the eight they’re in a group with) have been singled out – it’s that when, for whatever reason, OfS does a deep dive like this, it can help others to reach the necessary quality threshold. There’s a truth in that.

I both would and should say that what would really help would be a way of compiling this sort of stuff into something more digestible for staff and students. I was thinking as I read it, “how will I explain all of this to our subscriber SUs?” It’s notable that NSS results were generally pretty positive in the report, but as I keep saying – if you want students to feed back (either through reps or in surveys) on the B2 descriptions of quality, you do have to take steps to tell them they exist, and explain how they’ll work in practice.

That’s true for staff and external examiners too – and is still a gaping hole in the regulatory design if you want providers to identify poor quality themselves.

To some extent, the findings ought to strike fear into the hearts of all sorts of departments in all sorts of providers. If the combo of staff trade union commentary on being stretched, what SU officers of all stripes have told me about their own academic experience this summer, and a worsening external context for enabling student commitment and engagement are all true, the judgements here feel like judgements that could be made right across the sector – and probably should be.

The real problems here, though, are about that risk-based approach.

Samples!

Lost in time is OfS’ original intent to use a “random sampling” approach to identify a small proportion of providers each year for a more extensive assessment of whether they continue to meet the general ongoing conditions of registration. The idea was that it would be used to confirm the effectiveness of OfS’ monitoring system, and act as a further incentive for providers to meet their ongoing conditions of registration.

That was dropped on government instruction in the context of saving money – only to be replaced by a confusing, contested (and for all we know just as expensive) approach which seems to combine targeting the worst outcomes in easier-to-access cohorts in bigger subjects in bigger universities.

You can make an argument – as OfS does – that doing so is entirely sensible, and that it would be daft to deep dive into provision that shows no signs of being a problem. But given the potential external signalling problems in being selected, and the wedge of work it generates when you are selected, the sector will argue that this targeted approach leaves OfS open to challenge – especially when, of the first two reports it’s got around to publishing, one results in a clean bill of health.

Those concerns make sense – and I suspect they have driven the slow pace of publication here, which is in and of itself a problem. But more importantly, it means that students on provision that resembles Bolton’s, yet doesn’t fit those risk-based criteria, might feel unprotected – in the same way that plenty of motorists only used to slow down if they thought there was film in the speed camera.

You can fix that in a number of ways. Random sampling really should return as an approach. You can be much more explicit with students about what’s in those Conditions on the outputs side, setting out the way in which they can raise concerns outside of woolly “quality enhancement” processes that tend to regard addressing their feedback as an optional extra rather than a must.

You can also expect SUs to assess the university against the Conditions every year, offering clear protection if they say something uncomfortable – something we were close to in the old QAA process, but some distance from in the recent TEF student submission exercise. When you survey students, you can not only more clearly link that survey to the outputs in B2, you can also tell students what you’re going to ask them at the end, at the start – taking extra steps to ensure that postgraduates and those on TNE and franchise provision know what they should expect.

And to be fair here, the review teams really should spend more time considering that external context – especially if outcomes deteriorate after Year One. That is, unless OfS thinks that providers should somehow be able to predict cost-of-living crises, the atrocious atrophying of student financial support and the nonchalance about the challenges that all of this poses for students and staff.

Without that kind of approach, we’re doomed to a regulatory regime that will likely only spot a problem on large courses in the universities doing the real heavy lifting on the A(ccess and Participation) Conditions.

And as we know from NSS questions 10-14, effective assessment has to help you learn, be objectively fair and, crucially, feel fair too.
