We last covered this particular ranking nearly three years ago. URAP, the University Ranking by Academic Performance, is run from the Informatics Institute at the Middle East Technical University in Turkey and was established in 2009.

URAP team members are METU researchers who voluntarily participate in URAP Research Lab as public service. The main objective of URAP is to develop a ranking system for the world universities based on academic performance indicators that reflect the quality and the quantity of their scholarly publications. In line with this objective URAP has been annually releasing the World Ranking of Higher Education Institutions since 2010, and Field Rankings since 2011. The most recent rankings include 2500 HEIs around the World as well as 41 different specialized subject areas.

It’s not unfair to suggest that things have not really moved on a lot since 2009. The methodology is pretty similar, although some of the data sources have changed, and the aim remains to be more inclusive than the other rankings. Here’s the URAP rationale:

As globalization drives rapid change in all aspects of research & development, international competition and collaboration have become high priority items on the agenda of most universities around the world. In this climate of competition and collaboration, ranking universities in terms of their performance has become a widely popular and debated research area. All universities need to know where they stand among other universities in the world in order to evaluate their current academic performance and to develop strategic plans that can help them strengthen and sustain their progress. In an effort to address this need, several ranking systems have been proposed since 2003, including ARWU-Jiao Tong (China), THE (United Kingdom), Leiden (The Netherlands), QS (United Kingdom), Webometrics (Spain), HEEACT/NTU (Taiwan) and SciMago (Spain) which rank universities worldwide based on various indicators.

The use of bibliometric data obtained from widely known and credible information resources such as Web of Science, Scopus, and Google Scholar has contributed to the objectivity of these ranking systems. Nevertheless, most ranking systems cover up to top 700-1000 universities around the world, which mostly represents institutions located in developed countries. Universities from other countries around the world also deserve and need to know where they stand among other institutions at global and national levels. This motivated us to develop a multi-criteria ranking system that is more comprehensive in coverage, so that more universities will have a chance to observe the state of their academic progress.

Although URAP is an actual ranking, its ostensible goal is apparently NOT to label world universities as best or worst. Rather, ranking universities from top to bottom in their list is intended “to help universities identify potential areas of progress with respect to specific academic performance indicators”. Rightly, URAP’s authors acknowledge that, as with other ranking systems, it is “neither exhaustive nor definitive” and they are always open to new ideas and constructive feedback. Still, they seem to have struggled to make a dent in the popularity of the other rankings they mention.

Method in the madness

Let’s have a closer look at the methodology then:

The URAP ranking system’s focus is on academic quality. URAP has gathered data about 3,000 Higher Education Institutes (HEI) in an effort to rank these organizations by their academic performance. The overall score of each HEI is based upon its performance over several indicators which are described in the Ranking Indicators section. The ranking includes HEIs except for governmental academic institutions, e.g. the Chinese Academy of Science and the Russian Academy of Science, etc. Data for 3,000 HEIs have been processed and top 2,500 of them are scored. Thus, URAP covers approximately 12% of all HEIs in the world, which makes it one of the most comprehensive university ranking systems in the world.

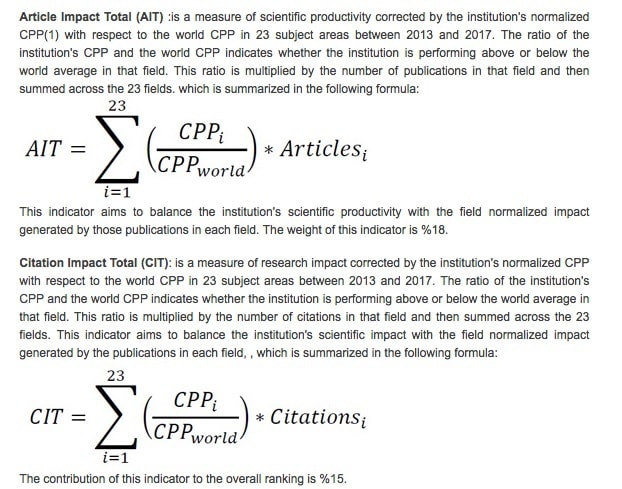

URAP ranking system is completely based on objective data obtained from reliable open sources. The system ranks the universities according to multiple criteria. Most of the currently available ranking systems are both size and subject dependent. The AIT and CIT indicators aim to minimize the influence of differences among publication trends across disciplines by providing subject level adjustment. As of 2017, URAP employed a new filter for the article and citation indicators to promote publication quality by focusing on articles published in journals that are listed within the first, second and third quartiles in terms of their Journal Impact Factor within their respective subject areas. The URAP research team is continuously working on new methods to improve the existing indicators.
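To make that quartile filter a little more concrete, here is a rough sketch of how such a rule might work. It is an illustration only, not URAP's actual code or formula: the function names, the tuple layout and the sample data are all assumptions, and ties in Journal Impact Factor are simply broken by sort order.

```python
from collections import defaultdict


def jif_quartiles(journals):
    """Assign each journal a JIF quartile (1 = top) within its subject area.

    `journals` is a list of (name, subject, jif) tuples. This layout is a
    hypothetical one chosen for illustration, not a URAP data format.
    """
    by_subject = defaultdict(list)
    for name, subject, jif in journals:
        by_subject[subject].append((name, jif))

    quartile = {}
    for subject, items in by_subject.items():
        # Highest impact factor first within the subject area.
        ranked = sorted(items, key=lambda item: item[1], reverse=True)
        n = len(ranked)
        for position, (name, _) in enumerate(ranked):
            # Map rank position to a quartile from 1 to 4.
            quartile[(name, subject)] = position * 4 // n + 1
    return quartile


def count_eligible_articles(articles, quartile, max_quartile=3):
    """Count articles per university, keeping only Q1-Q3 journals.

    `articles` is a list of (university, journal, subject) tuples (assumed).
    Journals missing from `quartile` are treated as Q4 and excluded.
    """
    counts = defaultdict(int)
    for university, journal, subject in articles:
        if quartile.get((journal, subject), 4) <= max_quartile:
            counts[university] += 1
    return dict(counts)


# Made-up sample data: four physics journals and three articles.
journals = [
    ("Journal A", "Physics", 4.0),
    ("Journal B", "Physics", 3.0),
    ("Journal C", "Physics", 2.0),
    ("Journal D", "Physics", 1.0),
]
articles = [
    ("University 1", "Journal A", "Physics"),
    ("University 1", "Journal D", "Physics"),  # Q4 journal, filtered out
    ("University 2", "Journal C", "Physics"),
]

quartiles = jif_quartiles(journals)
eligible = count_eligible_articles(articles, quartiles)
```

In this toy case each university ends up with one counted article, because the paper in the bottom-quartile journal is dropped before any indicator is calculated.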

So the key indicators are these – the ranking is very much citation-driven:

And, just to demonstrate how serious this all is, they have difficult formulae to arrive at the results:

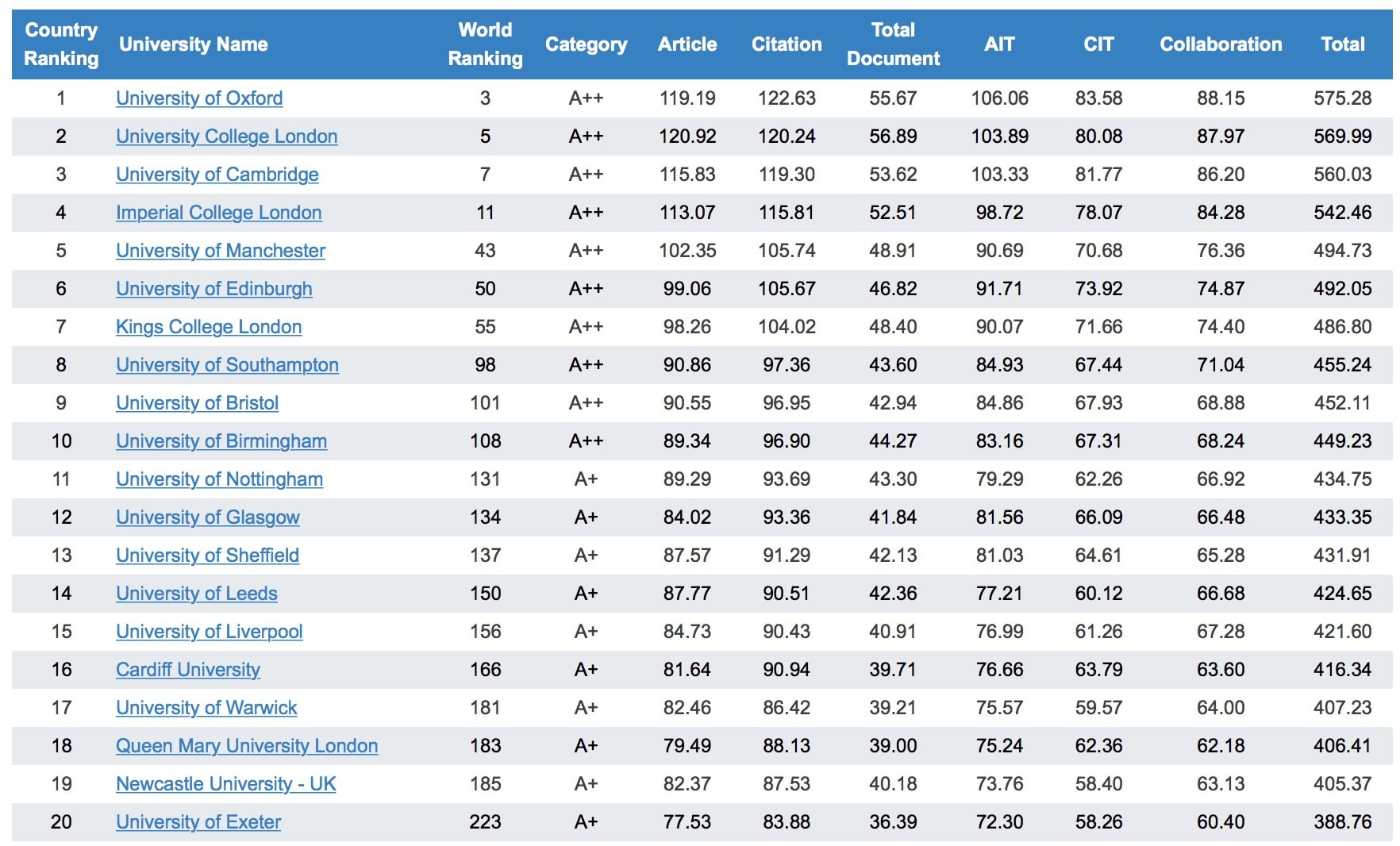

You can find the full ranking here but this is the UK top 20. It hasn’t changed much since the last time:

URAP has yet to have a real impact. It’s not going to have many university vice-chancellors and presidents bragging about their performance in it, despite the unique selling point of the gold-edged certificate for the executive office wall.