Over the last few weeks, universities have been presented with their data and the ‘flags’ – the plusses and minuses, and double plusses and double minuses – which show how well they’re doing relative to a benchmark of performance in the TEF. As the metrics and associated flags drive the TEF outcomes, these are important to understanding how the exercise will operate… and the likely results.

A little recap

Central to the Teaching Excellence Framework is the use of metrics to establish how well higher education providers perform. The metrics data – ideally the previous three years (but less if there’s less information available) – is then benchmarked based on the characteristics of the students at the provider, including factors such as age, ethnicity and subject of study. Each provider has a unique benchmark based on its students’ characteristics.

The result is a system of flags which are created, to use HEFCE’s description: “Once the core and split metrics are calculated and benchmarked, those results that are significantly and materially different from benchmark are highlighted. This is referred to as flagging.”

The six ‘core’ metrics that will weigh most heavily on institutions’ TEF results are:

- Teaching on my course (NSS)

- Assessment and feedback (NSS)

- Academic support (NSS)

- Non-continuation (HESA and ILR data)

- Employment or further study (DLHE)

- Highly skilled employment or further study (DLHE)

As well as the headline core metrics there are ‘splits’, which look at variations in each of the core areas by (amongst others) gender, ethnicity, age and disability. The aim of the split metrics is to establish how students from different backgrounds fare on the various measures relative to their peers. TEF is aiming to incentivise institutions to look seriously at – and address – inequity amongst different student groups; highlighting differences in this way will show where the work needs to be done, and where good practice can be celebrated.

What are flags, and why do they matter?

The flags will show the extent to which the provider is being more or less successful relative to the sector, and are central to the decision-making process for the TEF panel.

Institutions’ metrics that are both at least ±2 percentage points and ±2 standard deviations away from the benchmark will be flagged + (positive) or – (negative). Metrics that are both at least ±3 percentage points and ±3 standard deviations away from the benchmark will be flagged ++ or – –. There has been a minor amendment to the rules in cases where the percentage point variance wouldn’t otherwise result in a flag, but this is the essence of how the system will work.
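For readers who find rules easier to follow as code, here is a minimal sketch of the flagging thresholds described above. The function name `flag` and its parameters are illustrative, not anything taken from the TEF guidance. It assumes a metric where higher is better (a measure such as non-continuation would need the sign reversed), it writes negative flags with plain hyphens, and it leaves out the minor amendment mentioned above.

```python
def flag(indicator, benchmark, std_dev):
    """Return '++', '+', '', '-' or '--' for a single metric.

    indicator and benchmark are percentages; std_dev is the standard
    deviation of the difference, in percentage points (assumed > 0).
    All names here are hypothetical, chosen for illustration only.
    """
    if std_dev <= 0:
        raise ValueError("std_dev must be positive")

    diff = indicator - benchmark   # gap in percentage points
    z = abs(diff) / std_dev        # the same gap in standard deviations
    size = abs(diff)

    # Both conditions must hold together: the gap has to be
    # material (percentage points) AND significant (deviations).
    if size >= 3 and z >= 3:
        strength = 2
    elif size >= 2 and z >= 2:
        strength = 1
    else:
        return ""                  # no flag

    symbol = "+" if diff > 0 else "-"
    return symbol * strength
```

On this reading, a provider 3.5 points above its benchmark with a standard deviation of 1 gets a double positive, while one that is 3 points above but only 2 standard deviations clear gets a single positive, because both tests must be passed at the higher level.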

The benchmarking process doesn’t reward absolute performance, however, so it’s entirely possible to get a positive flag for retention, say, at 80% if you’re outperforming your peers while a university with 90% retention could get a negative flag if, based on their student intake, they’re below par. While this gives some particular institutions cause for concern, those sorts of absolute measures are already happily in the public domain (see pretty much any domestic league table). Where TEF differs is in providing new information for a wide public audience on how universities perform for the students they admit.

From flags to judgements

The guidance states that, for the core metrics:

- A provider with three or more positive flags (either + or ++) and no negative flags (either – or – – ) should be considered initially as Gold.

- A provider with two or more negative flags should be considered initially as Bronze, regardless of the number of positive flags. Given the focus of the TEF on excellence above the baseline, it would not be reasonable to assign an initial rating above Bronze to a provider that is below benchmark in two or more areas.

- All other providers, including those with no flags at all, should be considered initially as Silver.
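The three rules above can be sketched as a short function. This is only an illustration of the starting point as the guidance words it, not the panel's actual process: the name `initial_rating` is made up for the example, flags are written with plain hyphens for negatives, and an empty string stands for an unflagged metric.

```python
def initial_rating(core_flags):
    """Map a provider's six core-metric flags to an initial rating.

    core_flags is a list of strings: '++', '+', '', '-' or '--'.
    Single and double flags count the same here, since the quoted
    rules do not distinguish between them.
    """
    positives = sum(1 for f in core_flags if f in ("+", "++"))
    negatives = sum(1 for f in core_flags if f in ("-", "--"))

    # Two or more negatives means Bronze, whatever else is flagged.
    if negatives >= 2:
        return "Bronze"
    # Gold needs three or more positives and no negatives at all.
    if positives >= 3 and negatives == 0:
        return "Gold"
    # Everyone else, including providers with no flags, is Silver.
    return "Silver"


print(initial_rating(["+", "+", "++", "", "", ""]))    # Gold
print(initial_rating(["+", "+", "+", "+", "-", "-"]))  # Bronze
print(initial_rating(["", "", "", "", "", ""]))        # Silver
```

Note the asymmetry: a single negative flag is enough to block Gold, but it takes two to force Bronze, so a provider with three positives and one negative starts at Silver.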

The six core metrics’ flags will count the most towards the TEF panel’s ‘initial hypothesis’. However, we are told that “the initial hypothesis will also take account of performance based on split metrics.” While both core and split metrics will be flagged, it appears that the panel will have more flexibility when considering the impact of split flags. The splits will be most interesting when they contradict the core flag in either direction.

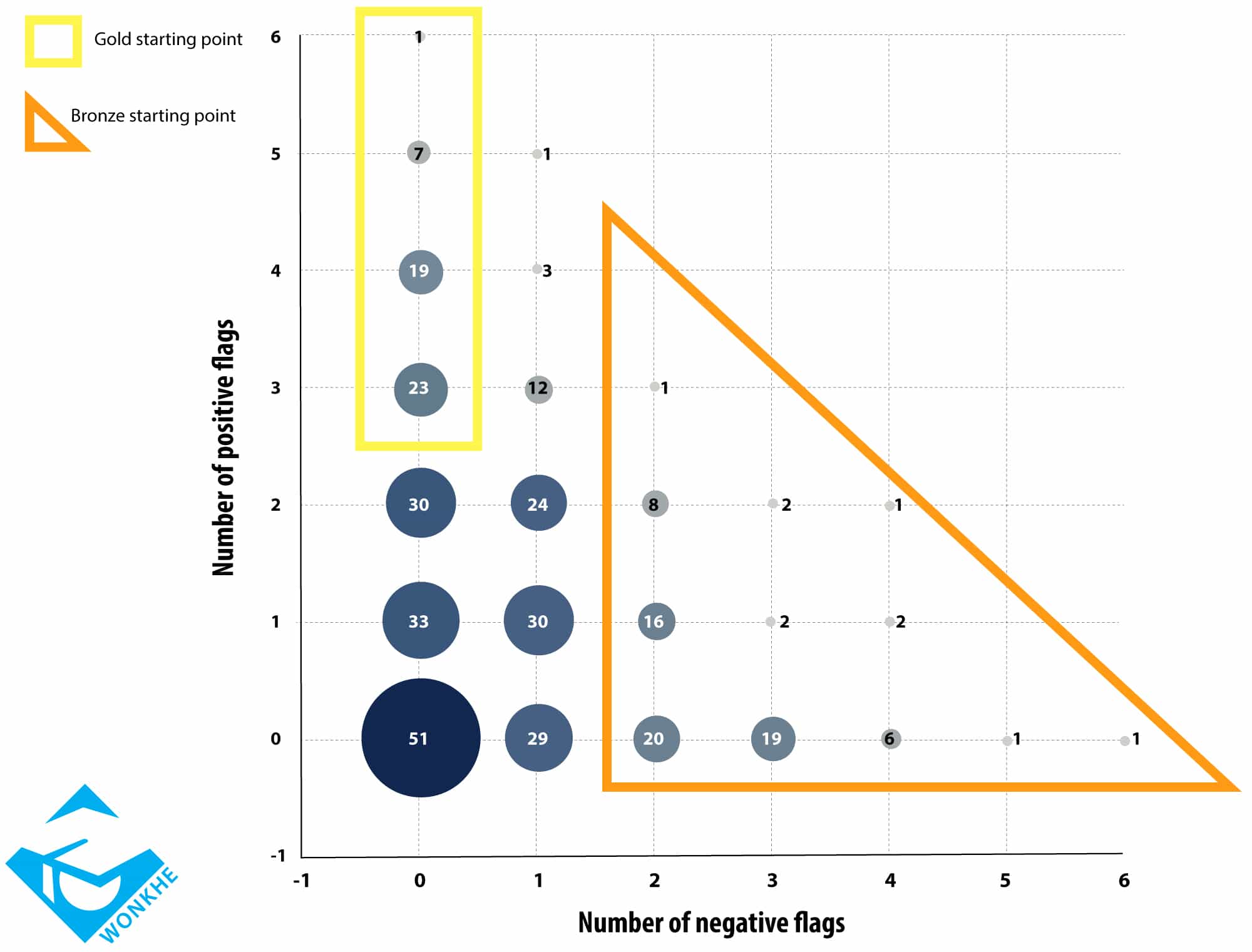

The diagram above was extrapolated from a presentation given recently by the TEF team to universities about what the TEF data tells us. It shows the distribution of flags across the sector, and the point at which different awards begin, based on the core metrics and full-time provision.

There is also a provider submission that is reviewed alongside the metrics and flags where more evidence can be supplied to explain away issues or make a case for a higher rating. However, the guidance states, with original emphasis:

“The likelihood of the initial hypotheses being maintained after the additional evidence in the provider submission is considered will increase commensurately with the number of positive or negative flags on core metrics. That is, the more clear-cut performance is against the core metrics, the less likely it is that the initial hypothesis will change in either direction in light of the further evidence.”

Be warned then: there’s some scope at the margins for movement up and down, but where the flagging system – with an emphasis on the core metrics – puts a provider deep inside one of the three outcome categories, that’s where it’s staying. The guidance is not explicit about the significance that a ‘double positive’ or ‘double negative’ flag could have, but presumably these will be given particularly close attention by the judgement panel.

That said… the guidance also allows for some additional wiggle room: assessors are invited to give less weight to the NSS-driven metrics and more to retention and employment. And so we’re left without certainty on the extent to which the TEF panel will apply the metrics/flags-based approach and the latitude to make more subjective decisions. One reading is to say that the metrics will always determine the initial hypothesis, and that will stand unless the panel is swayed by significant evidence to the contrary. But another reading suggests that there will be significantly more deviation from the prescribed rules with a range of get-out options for the panel. Chris Husbands, the TEF panel’s chair, has written for Wonkhe addressing the provider submissions and the role of the panel.

Results day

As the diagram above shows, the initial TEF flag results – that is, just on the basis of the benchmarked flagging system for core metrics, without the provider submissions – seem to show 50 institutions in presumed Gold, 213 in presumed Silver and 79 in presumed Bronze. This is roughly in line with the expectations from DfE: Gold was expected to be around 20% but is currently at 15%; Silver expected to be 50-60% but is at 62%; Bronze the remaining 20-30%, now at 23%. It also gives some scope for grade inflation as the Silver institutions with more positive flags make the case as strongly as possible to tip over the line to Gold.
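The percentages quoted above follow directly from the three counts, as a quick check shows. The counts are the ones given in the text; the variable names are just for illustration.

```python
# Presumed initial ratings, as read off the diagram in the article.
counts = {"Gold": 50, "Silver": 213, "Bronze": 79}

total = sum(counts.values())

# Share of each rating, rounded to the nearest whole percent.
shares = {rating: round(100 * n / total) for rating, n in counts.items()}

print(total)
print(shares)
```

That gives 342 providers in all, with Gold at 15%, Silver at 62% and Bronze at 23%.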

An institution with six negative flags in the core metrics (a full sweep) will be graded Bronze, but so will an institution with four positive flags and only two negative ones. Presumably alarm bells will be ringing somewhere for those institutions with three or more negative flags and no positive ones, but TEF will be happy to award them Bronze in any case. Some graduates who narrowly missed first-class degrees and had to share a 2:1 with those on significantly lower marks might be feeling some schadenfreude that the sector is being graded in a similar way.

An institution with five or six positive flags will share Gold with institutions with ‘only’ three positive flags. Perhaps a ‘Platinum’ award can be given to the single institution with six positive flags – make your own guesses as to who this might be. Critically, because of the nature of the benchmarking, the vast majority of institutions will have no, or only one, positive or negative flag, leaving them safely within Silver territory; universities’ own ‘solid 2:1’. We’ll have to wait until next May to read the final class lists, where unlike at a certain ancient institution, there is no doubt that there’ll be a full and very public publishing.
