Avid Wonkhe readers will recall that the Office for Students (OfS) business plan contains references to the development of a national survey into the views of postgraduate students.

In fact, the survey has been well signalled – OfS’ own director of external relations Conor Ryan wrote about the plans on the site in October 2018. Back then we noticed with interest that as well as capturing the usual satisfaction with the quality of teaching, they would also use the survey to look at aspects of students’ wider experience and students’ perceptions of value for money.

Since then things have gone quiet, until institutional exhortations to complete an OfS postgraduate survey started to pop up on social media almost as soon as NSS 2019 formally closed. University webpages say that “summary results of the survey will be shared with your university or college, enabling them to identify strengths and weaknesses in their current postgraduate taught offer”, which is interesting because the Conor Ryan piece said that this first stage work would not be published in a way that identifies responses at provider level. Not published externally, anyway.

Pilot license

Officially, OfS says that the pilot takes a similar approach to the National Student Survey (NSS) of undergraduate students (it certainly uses the same Likert scale) and it is to use the findings to understand the priorities of taught postgraduate students, field-test survey questions, assess the long-term feasibility of launching a survey nationwide, and contribute to the future development of a regular PGT experience survey that is relevant to PGT students across England.

The first trial has been opt-in, and it seems that more than 60 universities and colleges have taken part. Of particular interest to us is what is included, and what isn’t included – and what signals this could send about OfS’ thinking both on postgraduates and the future of the NSS more generally.

What’s in and what’s out?

First up, the survey invites respondents to answer on their motivations:

Please could you let us know your reasons for studying this taught postgraduate course?

This is sensible – different motivations for different programmes could lead to different factors being important to students and suggest different priorities for different courses. It’s also something we could do with knowing for students at undergraduate level.

It then asks:

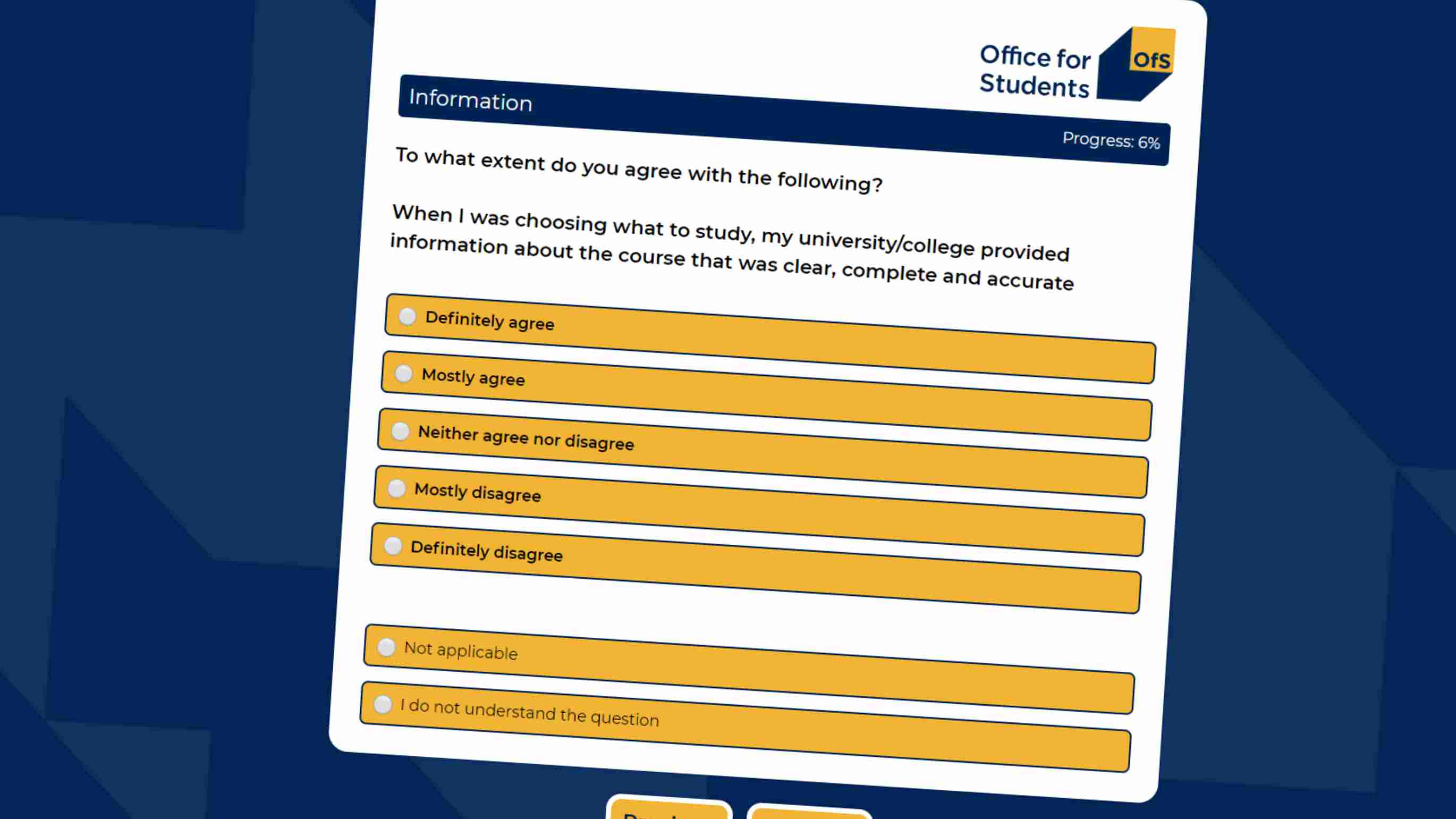

When I was choosing what to study, my university/college provided information about the course that was clear, complete and accurate.

This is definitely something that’s not in the current NSS, and is the first of its wider regulatory duties that OfS appears to be testing in this pilot; this one on consumer information. It’s a pity that the question focuses on course information – so much of what students get upset about (and pay for) is about the services on offer from the institution more generally, particularly where the realities don’t live up to the promises made and expectations set.

Familiarity breeds some contempt

We then start to get some more familiar questions. Many will recognise “Any changes in the course or teaching have been communicated in a timely and effective way” as Question 17 from the “Organisation and Management” section of NSS. Question 15 (“My course is well organised and running smoothly”) also makes an appearance – but Question 14 (“The timetable works efficiently for me”) is conspicuous by its absence. Maybe OfS thinks that timetabling is all hunky dory for PGTs. PGT students juggling work, home and university commitments might disagree.

Teaching quality (or at least satisfaction proxies for it) is an obvious focus. A slightly reworded NSS Question 1 appears (“Teaching staff are good at explaining their subject matter”), as does NSS Question 3 (“The course is intellectually stimulating”). NSS questions on staff making the subject interesting and intellectual stimulation get refocussed for postgrads as “My course encourages me to think critically and challenge current perspectives in my chosen field of study”, and “My course facilitates my own independent learning”, and the interestingly phrased “My course has challenged me to achieve my best work”.

There are some interesting additional aspects being tested too. “The objectives of my course are clear”, “My course is up-to-date and informed by current thinking and/or practice” and “My course gives me the opportunity to engage with experts in my field outside my university/college. Including (but not limited to) guest lecturers” all point to assumptions about what postgraduate study is for, and what students expect from it. It would be fascinating to find out why these aspects have been chosen for testing over others.

Fans of the assessment and feedback section of the NSS will be thrilled to find that NSS Q8, 10 and 11 (criteria in advance, timely feedback and helpful comments) all make an appearance. Oddly, this does mean that “Marking and assessment has been fair” is missing – that’s a real pity given the real questions that students have over the efficacy of moderation and external examining, especially when the number of assessments is significantly lower than the average UG course. Similarly “I have appropriate opportunities to give feedback on my course” (which is almost NSS Q23) is there, but NSS equivalents on whether staff value students’ views, clarity on students’ feedback on the course and effectiveness of the students’ union are all missing. Student reps begging their providers to get better at closing the feedback loop will be keen to see a final version that includes action as well as opportunity.

On community, NSS Q22 gets a slight rewording (“I have had the right opportunities to work with other students as part of my course” becomes “My course provides me with the opportunities I need to discuss my work with other students/peers in person or on-line”) but NSS Q21 (“I feel part of a community of staff and students”) is out. This is also a shame – many PGTs (particularly international) report isolation and we have a working hypothesis (which some students’ unions will be testing with research over the summer) that there’s a correlation between NSS Q21 and mental health given our loneliness work earlier in the year.

When it comes to academic support, NSS asks about being able to contact staff, which is there – but it also asks about receiving sufficient advice and guidance and getting good advice on study choices. You can see why the latter might not have made the cut, and the former is reworded as “I get the support I need to meet the academic challenges of postgraduate study”. There is also the sensible addition of “supervision for my dissertation or major project meets/met my needs” where the respondent says they are doing one.

New entry!

There are fascinating new questions that could point to the sorts of things we may end up seeing in NSS itself. “My university/college cares about my mental health and wellbeing” and “There is sufficient provision of student wellbeing and support services to meet my needs” are ways of looking at the mental health issue that might rankle with some. “I feel comfortable being and expressing myself at university/college” appears to be an attempt at testing institutional efforts on equality and diversity – it feels clunky as currently worded but doubtless triangulating with other E&D data will provide an interesting picture. See also “I feel safe at university/college”. If they make the cut, OfS will need to ensure that these types of questions aren’t gamed – there are potential perverse incentives on diversifying the student intake if these questions end up being used in the PG or UG surveys.

Overall satisfaction (NSS Q27) is still there, although a variant is tested using the phrasing (if not the actual scoring scale) from the “Net Promoter Score” – “I would recommend this course to others”. Perhaps most interesting of all, Value for Money is also tested – OfS were always going to find it hard to define, so it’s testing student satisfaction with the concept however students define it, worded as “I believe that my course offers me good value for money”. This also still has a strange fixation on “course” when much of the researched dissatisfaction (and expenditure) is about everything else provided, but it’s an inevitable addition to NSS if OfS is going to get any data at all on one of its four core duties.

As I made clear above, this is only a pilot. The relationship with AdvanceHE’s Postgraduate Taught Experience Survey (PTES) is unclear. These are unlikely to be final questions, and OfS’ official position is that although this and NSS use some of the same questions, they’re not linked – “inclusion in one doesn’t imply a change in the other”. That said, NSS questions “are always under review”. What is fascinating is that the days of a sector-owned and sector-led set of surveys, shaped by endless consultation, are obviously over. The morphing of NSS into a tool that can test things that OfS thinks are important like VFM or student wellbeing is well under way. And whilst to date it’s mainly been used by OfS within the TEF, it’s starting to become clear that NSS will soon be a much more wide-ranging tool for testing OfS’ success at meeting its objectives.

A great piece, Jim. Thank you for pulling out the differences and similarities. The big concern for me is the timing of the surveys. NSS is collected during major assessment periods when students are at their most stressed and pressurised, and PTES starts five months into a year-long course. And it doesn’t take account of the different PGT start dates. These metrics are now critical to universities. As a result, the data needs to be collected when students have time to properly reflect on their studies.

Michelle’s point about the timing of surveys is critical. Many PGT programmes are designed to culminate in a major project that brings together all the previous learning. It surely can’t be so difficult to time the survey to be released to individual students as they submit their final assessment – whatever form that might take and whenever it is. This would also allow for multiple start/end dates. Delivering a survey that does what it intends has to be more important than sticking to an administrative time frame that could deliver misleading outcomes.

I agree with the two comments above – if the surveys took place at the end of the programme of study then students would be better able to reflect.

As a note on timing, I attended a HEFCE event on the PGTNSS a while back and the intention was, at the time, to collect at multiple points in the year, a bit like GOS. However, how the release and publication of results would work was quite sketchy. Things may have changed quite a lot since then though!