Across the higher education sector in England some have been waiting with bated breath for details of the proposed new Teaching Excellence Framework. Even amidst the multilayered preparations for a new academic year – the planning to induct new students, to teach well and assess effectively, to create a welcoming environment for all – those responsible for education quality have had one eye firmly on the new TEF.

The OfS has now published its proposals along with an invitation to the whole sector to provide feedback on them by 11 December 2025. As an external adviser for some very different types of provider, I’m already hearing a kaleidoscope of changing questions from colleagues. When will our institution or organisation next be assessed if the new TEF is to run on a rolling programme rather than in the same year for everyone? How will the approach to assessing us change now that basic quality requirements are included alongside the assessment of educational ‘excellence’? What should we be doing right now to prepare?

Smaller providers, including further education colleges that offer some higher education programmes, have not previously been required to participate in the TEF assessment. They will now all need to take part, so have a still wider range of questions about the whole process. How onerous will it be? How will data about our educational provision, both quantitative and qualitative, be gathered and assessed? What form will our written submission to the OfS need to take? How will judgements be made?

As a member of TEF assessment panels through TEF’s entire lifecycle to date, I’ve read the proposals with great interest. From an assessor’s point of view, I’ve pondered how the assessment process will change. Will the new shape of TEF complicate or help streamline the assessment process so that ratings can be fairly awarded for providers of every mission, shape and size?

Panel focus

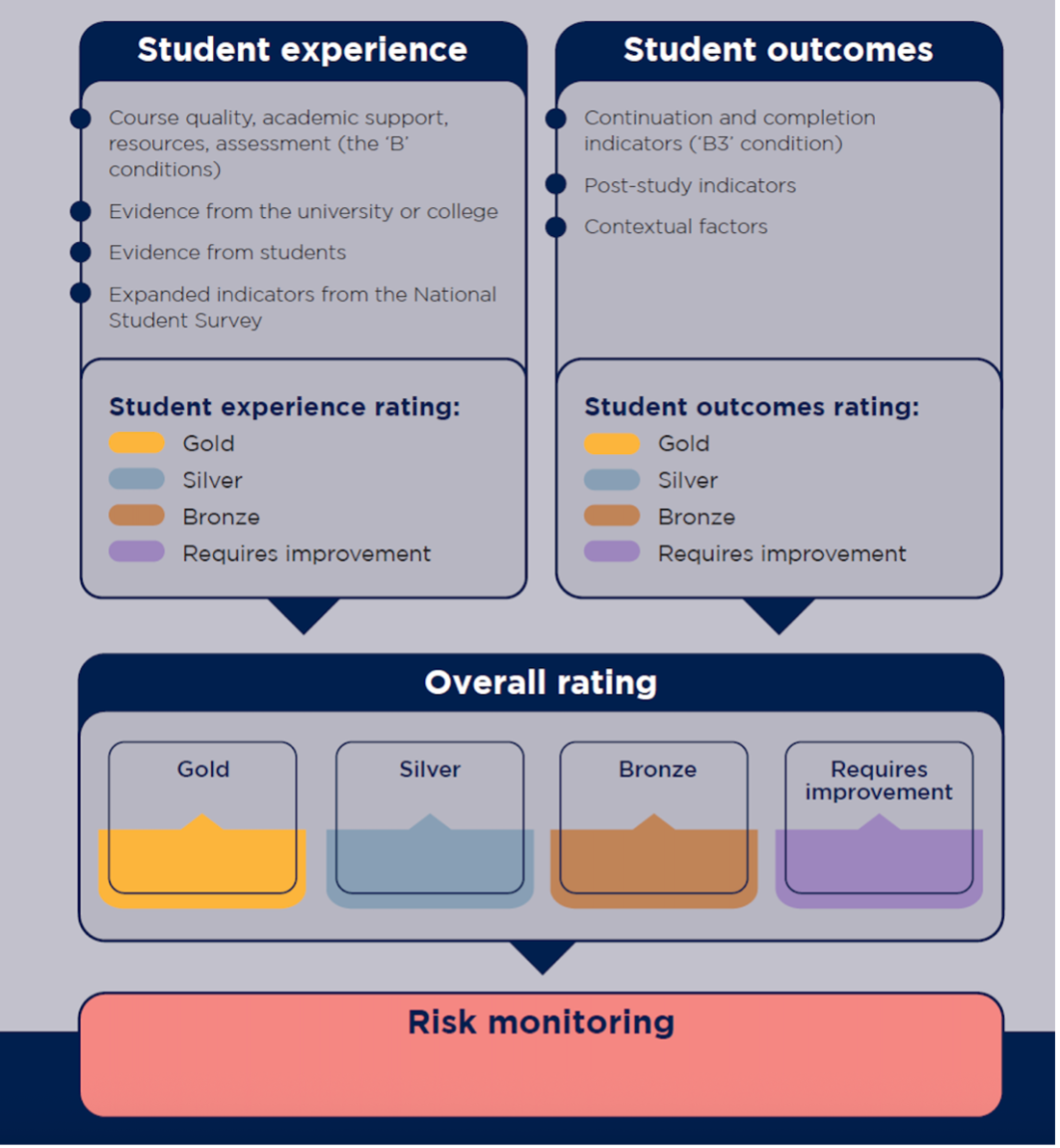

TEF panels have always comprised experts from the whole sector, including academics, professional staff and student representatives. We have looked at the evidence of “teaching excellence” (I think of it as good education) from each provider very carefully. It makes sense that the two main areas of assessment, or “aspects” – student experience and student outcomes – will continue to be discrete areas of focus, leading to two separate ratings of either Gold, Silver, Bronze or Requires Improvement. That’s because the data for each of these can differ quite markedly within a single provider, so it can mislead students to conflate the two judgements.

Another positive continuity is the retention of both quantitative and qualitative evidence. Quantitative data include the detailed datasets provided by OfS, benchmarked against the sector. These are extremely helpful to assessors who can compare the experiences and outcomes of students from different demographics across the full range of providers.

Qualitative data have previously come from 25-page written submissions from each provider, and from written student submissions. Changes are planned for both of these forms of evidence, but they will remain crucial.

The written provider submissions may be shorter next time. Arguably there is a risk here, as submissions have always enabled assessors to contextualise the larger datasets. Each provider has its own story of setting out to make strategic improvements to its educational provision, and the submissions include both qualitative narrative and internally produced quantitative datasets related to the assessment criteria, or indicators.

However, it’s reasonable for future submissions to be shorter as the student outcomes aspect will rely upon a more nuanced range of data relating to study outcomes as well as progression post-study (proposal 7). While it’s not yet clear what the full range of data will be, this approach is potentially helpful to assessors and to the sector, as students’ backgrounds, subject fields, locations and career plans vary greatly and these data take account of those differences.

The greater focus on improved datasets suggests that there will be less reliance on additional information, previously provided at some length, on how students’ outcomes are being supported. The proof of the pudding for how well students continue with, complete and progress from their studies is in the eating: in the outcomes themselves rather than the recipes. Outcomes criteria should be clearer in the next TEF in this sense, and more easily applied with consistency.

Another proposed change focuses on how evidence might be more helpfully elicited from students and their representatives (proposal 10). In the last TEF students were invited to submit written evidence, and some student submissions were extremely useful to assessors, focusing on the key criteria and giving a rounded picture of local improvements and areas for development. For understandable reasons, though, students of some providers did not, or could not, make a submission; the huge variations in provider size mean that in some contexts students do not have the capacity or opportunity to write up their collective experiences. This variation was challenging for assessors, and anything that can be done to level the playing field for students’ voices next time will be welcomed.

Towards the data limits

Perhaps the greatest challenge for TEF assessors in previous rounds arose when we were faced with a provider with very limited data. OfS’s proposal 9 sets out to address this by varying the assessment approach accordingly. Where there is no statistical confidence in a provider’s NSS data (or no NSS data at all), direct evidence of students’ experiences with that provider will be sought, and where there is insufficient statistical confidence in a provider’s student outcomes, no rating will be awarded for that aspect.

The proposed new approach to the outcomes rating makes great sense – it is so important to avoid reaching for a rating which is not supported by clear evidence. The plan to fill any NSS gap with more direct evidence from students is also logical, although it could run into practical challenges. It will be useful to see suggestions from the sector about how this might be achieved within differing local contexts.

Finally, how might assessment panels be affected by changes to what we are assessing, and the criteria for awarding ratings? First, both aspects will incorporate the requirements of OfS’s B conditions – general ongoing, fundamental conditions of registration. The student experience aspect will now be aligned with B1 (course content and delivery), B2 (resources, academic support and student engagement) and part of B4 (effective assessment). Similarly, the student outcomes B condition will be embedded into the outcomes aspect of the new TEF. This should make even clearer to assessors what is being assessed, where the baseline is and what sits above that line as excellent or outstanding.

And this in turn should make agreeing upon ratings more straightforward. It was not always clear in the previous TEF round where the line should be drawn between Requires Improvement and not even meeting the basic requirements for the sector. This applied only to the very small number of providers whose provision did not appear, to put it plainly, to be good enough.

But more clarity in the next round about the connection between baseline requirements and ratings should aid assessment processes. Clarification that in the future a Bronze award signifies “meeting the minimum quality requirements” is also welcome. Although the sector will need time to adjust to this change, it is in line with the risk-based approach OfS wants to take to the quality system overall.

The £25,000 question

Underlying all of the questions being asked by providers now is a fundamental one: how will we do next time?

Looking at the proposals with my assessor’s hat on, I can’t predict what will happen for individual providers, but it does seem that the evolved approach to awarding ratings should be more transparent and more consistent. Providers need to continue to understand their own education-related data, both quantitative and qualitative, and commit to a whole-institution approach to embedding improvements, working in close partnership with students.

Assessment panels will continue to take their roles very seriously, to engage fully with agreed criteria, and to do everything we can to make a positive contribution to encouraging, recognising and rewarding teaching excellence in higher education.