TEF medals are almost here. Will they help or hinder students making choices?

Jim is an Associate Editor (SUs) at Wonkhe

To accompany the release, the Office for Students (OfS) published a detailed and updated timeline, along with some guidance on how to communicate the award once confirmed in public on 28 September.

You’ll be thrilled to learn that TEF 2023 logos are available as both RGB and CMYK files and in JPG, EPS and PNG formats. Reversed-out and black and white versions are also available. The joy!

Now that initial results are in the wild, “representations” can be made – a kind of appeal. Representations can be made if providers consider the panel judgements “do not appropriately reflect the evidence the panel had available to it”. If only students were afforded the same privilege when challenging the academic judgement leading to their medal.

The panel then considers any representations and decides whether the provisional rating remains appropriate or should be amended before settling the final TEF rating. We’ll be able to spot many of the providers that have appealed – ratings still under consideration won’t be announced on 28 September.

As a reminder, officially the TEF “encourages universities and colleges to make improvements to retain or improve their rating in future”. There will be a variety of views about whether that’s working, at least over the aspects it looks at.

But that’s not the only purpose.

It’s also about “shining a spotlight on the quality of courses” and “informing student choice”. In other words it sends a signal – that if you go there, X is likely to happen. But can the signal be relied upon – does it inform choice, or does it actually obscure it?

I’m mainly assessing it on its own terms here – there’s plenty of wider critique on the site if that’s your fancy.

1. It’s aimed at the wrong students. Arguably, those most at risk of not getting decent information are international PGTs – and it’s an undergraduate exercise.

I’d also argue that those who are under pressure to “spend” their UCAS points on the highest tariff course possible are vulnerable to making bad choices – but the exercise won’t really help them.

And those that can’t shop around because they have to go local aren’t served by it either. So it serves a group who already have the most information and who already use more granular information to make choices – with the added danger that it “informs” choice for others when it hasn’t assessed that provision.

2. It relies on old data. By the time the medals emerge, new NSS data will already be more up to date – and by the time the medal expires, it will be using some very old data indeed – roughly eight years old. Lots of change can happen in that time on both experience and outcomes.

3. A given subject area may well be very different to the mean averages. In fact a whole subject area might be below the “minimum” B3 outcomes threshold and because OfS hasn’t intervened thus far, the university could get, say, Silver on outcomes. That feels… sub-optimal.

4. Only wonks would know that the metrics driving half of it are benchmarked in groups. The suggested copy and logos supplied by OfS make no mention of that. That feels seriously misleading, even if it feels “fairer” within the sector.

5. It doesn’t cover the whole student experience. In fact it doesn’t even cover the whole student academic experience. A university could be doing very badly indeed on an important aspect – organisation and management for example – but because the model only uses five of the NSS categories, that wouldn’t impact the rating.

6. Medals will emerge too late. Providers ending up with “requires improvement”, for example, can appeal and effectively delay telling the world until the fee liability deadline kicks in. So much for helping with choice!

7. Officially universities have to communicate their whole rating. Both the headline and the two halves of experience and outcomes have to be mentioned. But I suspect many will display it like this:

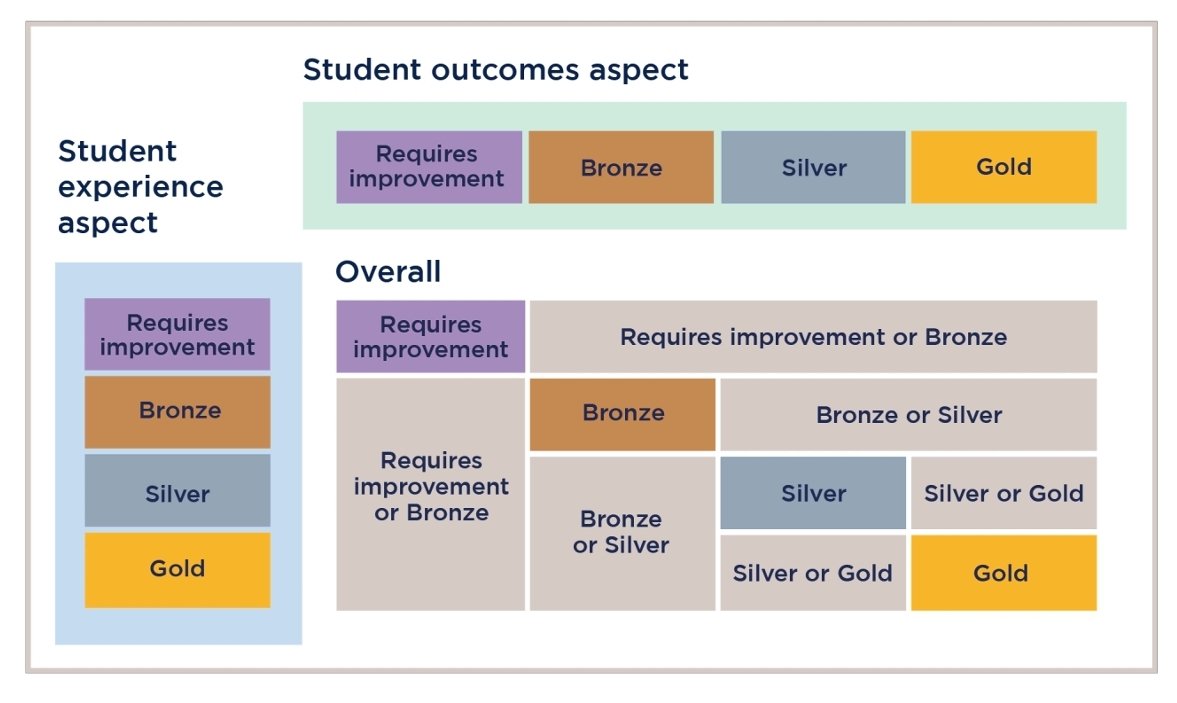

8. The floor is lava: Even in the sector many think there’s a floor – you can’t do better than either half of experience or outcomes. But that’s not true. This…

… can lead to this:

9. The unit of assessment is the provider. So for a provider with 100 students you might have reasonable confidence that what you’ll experience is related to the medal. But if you’re looking at a huge provider, entire subject areas of many thousands of students could be Bronze if assessed separately but the overall medal may be Silver.

In theory OfS did direct panels to look for consistency – but the guidance felt… broad.

Crucially, the bigger the university, the easier it is to use averages to hide bad experience or outcomes. And the less useful the medal is as a result – even though the medal in theory will have been relied on by more students. From the point of view of the student, it’s the inverse of risk-based.
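To make the averaging point concrete, here is a minimal sketch in Python. Every number in it is invented for illustration: the subject names, the student counts, the rates and the 80% cut-off are all assumptions, not TEF or B3 figures, and the real exercise uses benchmarked indicators and panel judgement rather than a single cut-off. It only shows how a student-weighted provider average can clear a line that one large subject area misses.

```python
# All figures below are hypothetical, chosen only to illustrate the arithmetic.
# Each entry: subject name -> (number of students, outcome rate).
subjects = {
    "Subject A": (6000, 0.88),
    "Subject B": (5000, 0.86),
    "Subject C": (4000, 0.70),
}

# Invented cut-off, NOT an actual OfS threshold.
THRESHOLD = 0.80


def provider_rate(subjects):
    """Student-weighted average rate across all subjects."""
    total_students = sum(n for n, _ in subjects.values())
    weighted_sum = sum(n * rate for n, rate in subjects.values())
    return weighted_sum / total_students


def below_threshold(subjects, threshold):
    """Names of subjects whose own rate falls below the threshold."""
    return [name for name, (_, rate) in subjects.items() if rate < threshold]


overall = provider_rate(subjects)
hidden = below_threshold(subjects, THRESHOLD)
students_affected = sum(subjects[name][0] for name in hidden)

print(f"Provider-level rate: {overall:.1%}")
print(f"Subjects below the cut-off: {hidden}")
print(f"Students in those subjects: {students_affected}")
```

In this made-up case the provider as a whole sits above the line while thousands of students are in a subject area that sits well below it, which is the situation a single provider-level medal cannot show.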

10. How are partners considered? Finally, OfS says “Where a provider is involved in partnership arrangements, it should display only its own TEF ratings, not those of its partners”.

If you run a franchised course I *think* that means you don’t have to tell students that the franchising provider is one that “Requires improvement”, even though students actually enrol with that franchising university.

It also appears to mean a university with Gold that franchises to another provider doesn’t have to tell students they might be enrolling into a provider that “Requires improvement”.

Overall this doesn’t feel like a scheme that is especially helpful for informing student choice meaningfully. It’s so potentially misleading that I’d ban universities from telling anyone their rating.

Of course if you did that, it would lose the potency it has as an improvement exercise. Its logics depend on giving information to students to influence choices. But in doing so, it has the potential to obscure and cloud those choices. On its own terms, that leaves it worse than unhelpful – it’s outright dangerous.

Good spot about partners. That might need unpacking by OfS a bit more. B6 confirms you have to have 500 of your own students (that’s why a load of providers are not in). So if you’re not eligible to be in TEF, those providers don’t show a TEF rating anywhere. At least one provider has 500 students, but a larger number of its students are franchised in from other providers. So it shows its TEF rating but not that of the courses whose experience and outcomes were assessed at the other providers. Earlier in the guidance it says you should…

Point 4 about the benchmarking of data is particularly relevant/important now that we have separate judgments on Student Experience and Student Outcomes. It’s going to be very easy for someone to look at the Student Outcomes medals and come away thinking that student outcomes in absolute terms are better somewhere with a Gold rather than a Silver, or either of those compared to a Bronze. The benchmarking process means that this isn’t necessarily the case. Despite what the Pearce Review said, TEF is still as much about public information as it is about quality enhancement but it’s public information that…