Over the last four years I’ve spent a lot of time trying to expose the weaknesses of the global university rankings as a reliable way of evaluating the contribution of higher education institutions and enabling comparisons between them.

It’s not a difficult job: the inadequacies of the rankings are visible to the naked eye. It’s not even that difficult to persuade senior leaders of this. They’re not idiots – they can spot a shonky methodology at 100 paces.

What proves to be difficult is to persuade senior leaders to actually act on this knowledge. To accept that yes, the rankings are statistically invalid and deeply unhelpful to all actors in the HE system, but to then actually take some kind of publicly critical stand.

But back in 1995 that is exactly what the former President of Reed College, Steven Koblik, famously did. And this book, written by Koblik’s successor, Colin Diver, tells us all about it.

You can see why I was excited to read it.

What happens when you refuse to be ranked?

Breaking Ranks: How the rankings industry rules higher education and what to do about it is the fascinating first-hand account by Reed’s immediate past President of inheriting an institution that had decided “it would no longer be complicit in an enterprise it viewed as antithetical to its core values” and had begun withholding data from the rankings.

I have to admit I was a smidge disappointed, given the expansive and promising title, to learn in the opening chapters of the book that the “rankings industry” it refers to is almost exclusively the US News & World Report (USNWR) Ranking – and the “Best US Colleges” edition at that. The higher education it refers to is unapologetically US higher education. Consequently, the “what to do about it” means what to do about the USNWR. However, there is clearly common ground between the USNWR ranking portfolio and other ranking agencies’ offerings, so those with broader interests will still find it informative, whilst also receiving a detailed grounding in the USNWR.

Rankings and how we relate to them

The book begins by describing the evolution of rankings and their increasingly negative influence on the US HE sector. Having served as Dean of the University of Pennsylvania Law School before his role at Reed, Diver has seen this rise first-hand, which yields many interesting and humorous anecdotes. He also unpacks and systematically shoots down some of the approaches used by the USNWR and other ‘rankocrats’.

Diver asks whether university rankings measure and perpetuate wealth. (Spoiler alert: yes, they do.) HE has been reduced to “a competition for prestige”, he argues, with rankings becoming “the primary signifier”. He proceeds to critique three proxies for prestige commonly used by rankings, including an enjoyable rant about “unscientific straw polls”.

We then move on to investigate a particular feature of the USNWR College ranking: the way in which it “judges colleges by who gets in”. This may be of less interest to the international reader. However, the chapter detailing the games colleges play to win on these measures left me open-mouthed. And I’m not new to this lark.

The conclusion attempts to identify what HE institutions do offer the world which might actually be worth measuring and offers some advice for those seeking to loosen the rankings’ grip. More on that below.

As you might expect from a scholar of Diver’s standing, the book is well-structured, his arguments are well-built, and his writing style is very accessible. What you might not expect is his honesty. He is quite open about how in former roles he has himself engaged in less savoury ranking-climbing behaviours (e.g., hesitating to admit talented poor students for fear they would drag down the institution’s average LSAT score). He is also quite honest about the negative impacts of not engaging in such behaviours (watching others climb via the use of distasteful methods, whilst you fall back due to the lower scores the ranking gods bestow upon you if you don’t feed them fresh data).

For me, it is these lived experiences that form this book’s most original and valuable contribution. Many HE professional services staff are desperate to engage with their senior leaders on these issues; here is a senior leader who is desperate to do the same. Getting the opportunity to watch a university president think these matters through, to see the rationale that led to them taking a stand, and to see the impact that stand had on their institution, is gold dust. And, I cannot tell a lie, it gives me hope.

A four-step plan

His closing admonishments to both students and universities are simple: ignore the rankings if you can, but if you can’t, go to the opposite extreme and take them really seriously. That is, study them in so much detail that you truly understand the contribution they can make to answering any question you might have about the quality of an HE institution. Convinced this will dilute any confidence folk might have in the rankings, he even proposes an alternative decision-making approach in which institutions are allocated points according to the decision-maker’s priorities. As a proponent of starting with what you value when seeking to evaluate, I found myself nodding vigorously.

For institutions inspired by Reed’s anti-ranking stance, Diver offers a sensible four-stage withdrawal process. This consists of:

- not filling out peer reputation surveys,

- not publicising rankings you consider illegitimate,

- celebrating rankings that truly reflect your values, and

- giving everyone equal access to your data (rather than giving it to rankers for their exclusive use).

Hope for the rankings industry?

The big surprise for me, given the gritty exposé of the rankings’ methods and “grimpacts” contained in this book, was that Diver doesn’t conclude by dismissing the concept of rankings altogether. Despite the limitations inherent in all the indicators he dismantles, he seems to remain hopeful that an institution’s contribution to preparing students for a “genuinely fulfilling life” might one day be rigorously measured and that someone “will actually try to rank colleges accordingly”. This seems to me neither feasible nor desirable.

As I’ve said before, rankings are only necessary where there is scarcity: one job on offer, or one prize. For every other form of evaluation there are far more nuanced methods, including profiles, data dashboards, and qualitative approaches. Indeed, as the Chair of the INORMS Research Evaluation Group, which is itself currently developing an initiative through which institutions can describe the many and varied ways in which they are More Than Our Rank, I couldn’t help but think that Reed College would be the perfect exemplar for this.

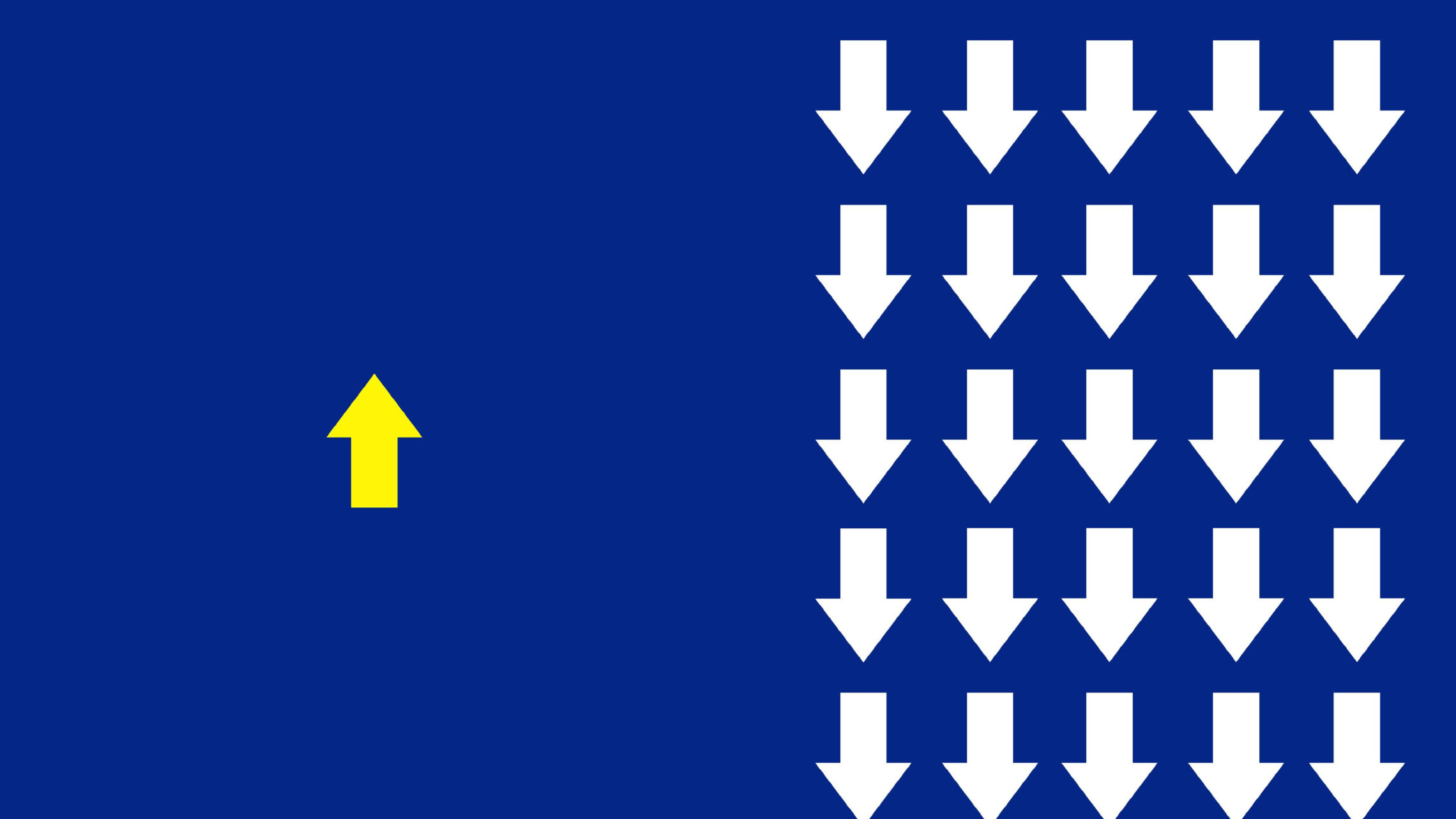

Back in 2007, Reed’s actions kick-started something of a resistance movement amongst 62 other US colleges. (We’re now seeing something similar amongst Chinese universities in response to the global rankings.) Unfortunately, this movement didn’t really gain momentum. But perhaps, as other initiatives arise, such as More Than Our Rank and the EUA/Science Europe Reforming Research Assessment Agreement, which forbids the use of rankings in researcher assessment, now is the time, and this book is the touch-paper?

Breaking Ranks is published by Johns Hopkins University Press and is priced at $27.95.

Thank you!

You may be interested in the following book too:

Steffen Mau (2019) The Metric Society: On the Quantification of the Social (transl. from German)

ISBN: 978-1-509-53041-0