The credibility of the TEF has been undermined by the allocation of Gold awards to three further education colleges with poor Ofsted grades, according to a recent Times report, which also highlighted Southampton vice-chancellor Sir Christopher Snowdon’s disdain for the whole exercise.

To make matters worse, the report noted, the leadership at one of the colleges had been described as inadequate by the Further Education Commissioner. Exactly who drew this line of reasoning is unclear, but it doesn’t really matter: the argument that TEF results should be aligned with Ofsted outcomes is invalid.

The teaching considered by the TEF is not inspected by Ofsted, whose remit covers only ‘programmes outside of higher education’. Of course, one could infer from a poor Ofsted grade for teaching quality that the higher education provision at the same institution might also be weak, but that is merely conjecture.

As someone who helps colleges develop their curriculum, I commonly find institutions where higher and further education provision are two distinct activities rather than aligned ones. Internal progression rates from Level 3 to Level 4 are often unhealthy because teaching cultures, processes or views are incongruent between the higher and further education sides. This suggests it would be a mistake to infer too much about the quality of a college’s higher education from the state of its general 16 to 18 provision, or from Ofsted’s assessment of it.

That the Gold-winning City of Liverpool College was subject to intervention by Further Education Commissioner David Collins in 2013, primarily because of its financial troubles, is similarly a red herring. There is no available evidence of a causal relationship between a college’s general finances and the quality of teaching in a higher education classroom. Given the continued health of higher education funding compared with the recent carnage inflicted on further education funding, the link is entirely inappropriate.

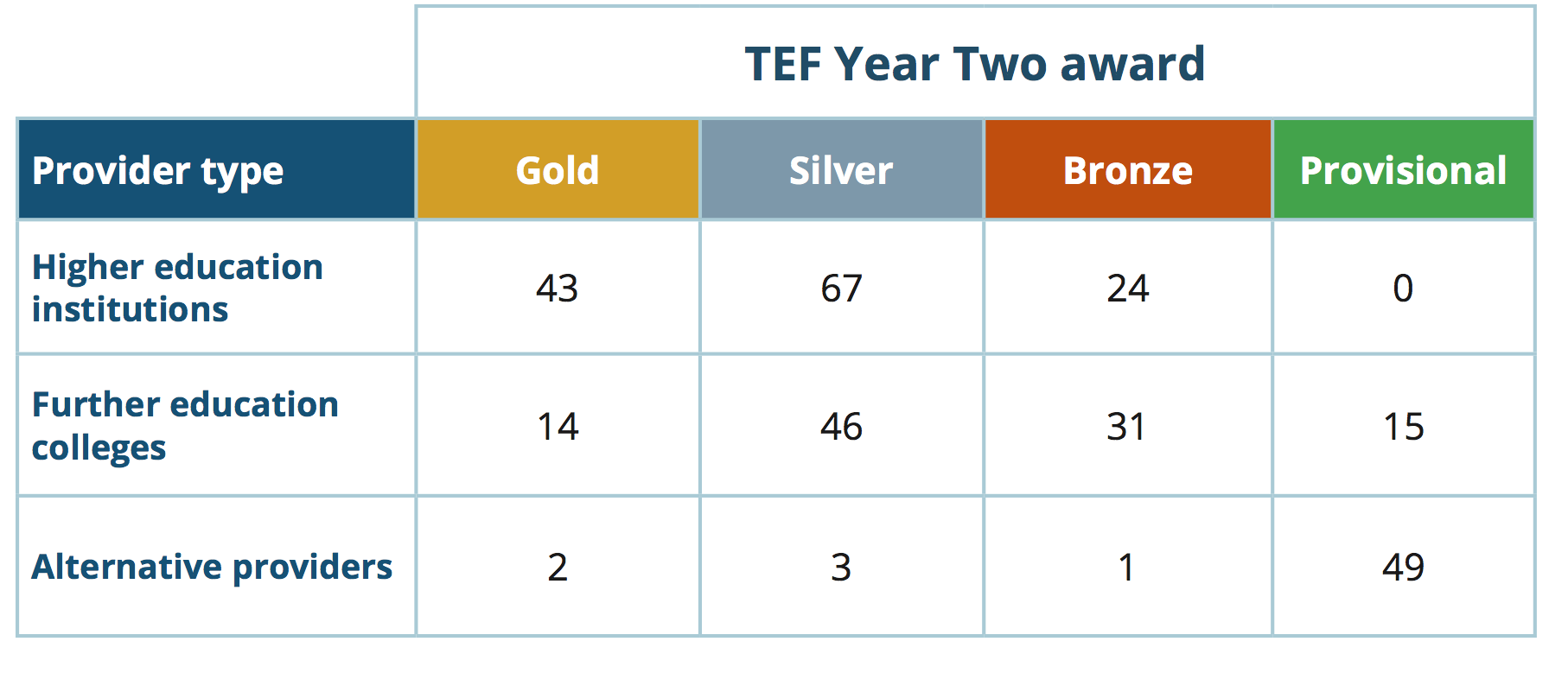

In fact, the available evidence suggests that many colleges were good value for their Gold awards (of which, incidentally, they received proportionally fewer than universities did).

TEF results are heavily influenced by NSS, ILR/HESA and DLHE data. Colleges do not take part in the DLHE exercise. Robust analysis of colleges’ NSS results is, regrettably, scarce. Yet HEFCE data on trends between 2005 and 2013 show that, of the twelve NSS 2016 questions used to inform this first TEF, franchised (college) provision outperformed non-franchised provision on eight.

In 2015, colleges topped the NSS tables for satisfaction. In the same year, higher education students taught in colleges were more satisfied with assessment and feedback than their university counterparts. More specifically, and unsurprisingly, the colleges awarded TEF Gold scored well in the NSS 2016 teaching, assessment and support sections.

It is a point made elsewhere but worth repeating: it is difficult to criticise the TEF after the fact without it looking like sour grapes. Pointing to the performance of others, rather than to fundamental flaws in the process itself, makes this worse. There does appear to be substance in Sir Christopher’s criticism of the balance between core metric scores and the university’s submission in evaluating Southampton’s performance, and of the variation in drop-out rate benchmarks.

But it’s time for the media, and those in the sector who might be inclined to join them, to stop using colleges as a basis for their criticisms of the TEF. If anything, there is plenty of evidence to suggest that colleges were disadvantaged in the exercise compared with universities.

More general points about TEF as a proxy for teaching quality – as the TEF chair says himself, these are measures based on ‘some outcomes of teaching’ – are irrefutable. But they are undercut when we make unfounded attacks on other institutions. No-one likes a sore loser.

“Colleges do not take part in the DLHE exercise.” Yes, they do. However, the data from this is very much dependent on cohort size.

It should also be considered that the TEF did not fairly represent a college’s entire provision. Many colleges have larger overall numbers of part-time courses, which were not included in the TEF matrix for a number of reasons. College TEF results might have been very different (and not necessarily worse) had these courses and students been considered.